Client

The provided command line client daqOcmCtl can interact with daqOcmServer. Its environment variables and command line arguments are described in the following sections.

Environment Variables

- $OCM_REQUEST_EP

Specifies the default OCM request/reply endpoint, e.g. zpb.rr://127.0.0.1:12345/.

- $OCM_PUBLISH_EP

Specifies the default OCM publish endpoint, e.g. zpb.ps://127.0.0.1:12345/.
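A script wrapping the client may want similar fallback behaviour. The Python sketch below returns an explicitly given endpoint if there is one and otherwise reads $OCM_REQUEST_EP or $OCM_PUBLISH_EP. The helper name resolve_endpoint and the rule that an explicit value wins are assumptions for illustration, not daqOcmCtl's documented resolution logic.

```python
import os
from typing import Mapping, Optional

# Environment variables documented for daqOcmCtl.
ENV_VARS = {"request": "OCM_REQUEST_EP", "publish": "OCM_PUBLISH_EP"}


def resolve_endpoint(kind: str,
                     explicit: Optional[str] = None,
                     env: Optional[Mapping[str, str]] = None) -> str:
    """Return the OCM endpoint for kind ("request" or "publish").

    Hypothetical helper: an explicitly given endpoint wins (assumption),
    otherwise the matching environment variable is used.
    """
    if explicit:
        return explicit
    source = os.environ if env is None else env
    name = ENV_VARS[kind]
    value = source.get(name)
    if not value:
        raise RuntimeError(f"no {kind} endpoint given and ${name} is not set")
    return value


if __name__ == "__main__":
    print(resolve_endpoint("request", env={"OCM_REQUEST_EP": "zpb.rr://127.0.0.1:12345/"}))
```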

Command Line Arguments

Exhaustive command line help is available under the option --help. The following list enumerates a subset of common commands.

Synopsis:

daqOcmCtl [options] <command> [options] <command-args>...

Standard interface commands:

- std.init

Sends the Init() command.

- std.enable

Sends the Enable() command.

- std.disable

Sends the Disable() command.

- std.exit

Sends the Exit() command.

- std.setloglevel <logger> <level>

Sends the SetLogLevel() command with the provided logger and level.

- std.getstate

Sends the GetState() command.

- std.getstatus

Sends the GetStatus() command.

- std.getversion

Sends the GetVersion() command.
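For scripted bring-up, the standard commands can be chained. The following Python sketch runs std.init and std.enable (an order assumed from the State Machine section, taking daqOcmServer from NotReady to Operational) followed by std.getstate, and relies on daqOcmCtl picking up $OCM_REQUEST_EP as its default endpoint. The helper run_ctl and its dry-run mode are hypothetical; by default it only returns the command lines it would execute.

```python
import shlex
import subprocess
from typing import List, Sequence

# Assumed bring-up order: Init, then Enable, then report the resulting state.
BRING_UP: List[List[str]] = [["std.init"], ["std.enable"], ["std.getstate"]]


def run_ctl(commands: Sequence[Sequence[str]], dry_run: bool = True) -> List[str]:
    """Run daqOcmCtl once per command and return the command lines.

    With dry_run=True (the default) nothing is executed. Otherwise daqOcmCtl
    uses its default endpoint, e.g. from $OCM_REQUEST_EP.
    """
    lines: List[str] = []
    for cmd in commands:
        argv = ["daqOcmCtl", *cmd]
        lines.append(shlex.join(argv))
        if not dry_run:
            # check=True raises CalledProcessError and stops at the first failure.
            subprocess.run(argv, check=True)
    return lines


if __name__ == "__main__":
    for line in run_ctl(BRING_UP):
        print(line)
```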

Data Acquisition commands:

- daq.start [options] <primary-sources> <metadata-sources>

Sends the StartDaq() command with the provided arguments.

- daq.startv2 [options] <specification>

Sends the StartDaqV2() command with the provided specification. The JSON specification can be read from a file by prefixing the path with @, e.g. @start.json. To read from stdin, the - convention is supported (/dev/stdin works as well), which can be used with bash heredocs, e.g.:

    $ daqOcmCtl --json daq.startv2 @- <<EOF
    {
      "sources": [
        {
          "type": "primaryDataSource",
          "sourceName": "dcs",
          "rrUri": "zpb://10.127.50.10:4050/RecCmds"
        },
        {
          "type": "metadataSource",
          "sourceName": "tcs",
          "rrUri": "zpb://10.127.50.15:5011/daq"
        }
      ]
    }
    EOF
    { "id": "TEST.2023-02-21T17:26:46.440", "error": false }

- daq.stop [options] <id>

Sends the StopDaq() command with the provided arguments.

- daq.forcestop [options] <id>

Sends the ForceStopDaq() command with the provided arguments.

- daq.abort [options] <id>

Sends the AbortDaq() command with the provided arguments.

- daq.forceabort [options] <id>

Sends the ForceAbortDaq() command with the provided arguments.

- daq.getstatus <id>

Sends the GetDaqStatus() command to query the status of the Data Acquisition identified by <id>.

- daq.awaitstate <id> <state> <substate> <timeout>

Sends the AwaitDaqState() command with the provided arguments:

  - <id>: Data Acquisition identifier.
  - <state>: Data Acquisition state to await.
  - <substate>: Data Acquisition substate to await.
  - <timeout>: Maximum time in seconds to wait for the state to be reached; the wait also ends if the state can no longer be reached.

- daq.updatekeywords <id> <keywords>

Sends the UpdateKeywords() command with the provided arguments.

- daq.getactivelist

Sends the GetActiveList() command.
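The StartDaqV2() specification can also be generated by a script and passed with the @file convention. This Python sketch builds the same two-source specification as the heredoc example, using only the "sources" fields shown there. The helper names source, build_spec and write_spec are hypothetical, and any further specification fields are deliberately left out.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Dict, List


def source(kind: str, name: str, rr_uri: str) -> Dict[str, str]:
    """One entry of the "sources" array, with the field names used in the example."""
    return {"type": kind, "sourceName": name, "rrUri": rr_uri}


def build_spec(primary: List[Dict[str, str]],
               metadata: List[Dict[str, str]]) -> Dict[str, Any]:
    """Minimal specification containing only the "sources" array."""
    return {"sources": [*primary, *metadata]}


def write_spec(spec: Dict[str, Any], path: Path) -> str:
    """Write the specification as JSON and return the @path argument for daqOcmCtl."""
    path.write_text(json.dumps(spec, indent=2))
    return f"@{path}"


if __name__ == "__main__":
    spec = build_spec(
        [source("primaryDataSource", "dcs", "zpb://10.127.50.10:4050/RecCmds")],
        [source("metadataSource", "tcs", "zpb://10.127.50.15:5011/daq")],
    )
    arg = write_spec(spec, Path(tempfile.gettempdir()) / "start.json")
    print(f"daqOcmCtl --json daq.startv2 {arg}")
```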

Server

The main OCM application is daqOcmServer, which implements all the Data Acquisition control and coordination features. The interface to control Data Acquisitions is covered in section The Data Acquisition Process, whereas the much simpler application state control is described in this section.

Changed in version 2.0.0: daqOcmServer interacts with daqDpmServer to execute the merge process that creates the final Data Product. daqOcmServer stores and loads relevant Data Acquisition state in its Workspace so that it can continue after an application restart.

State Machine

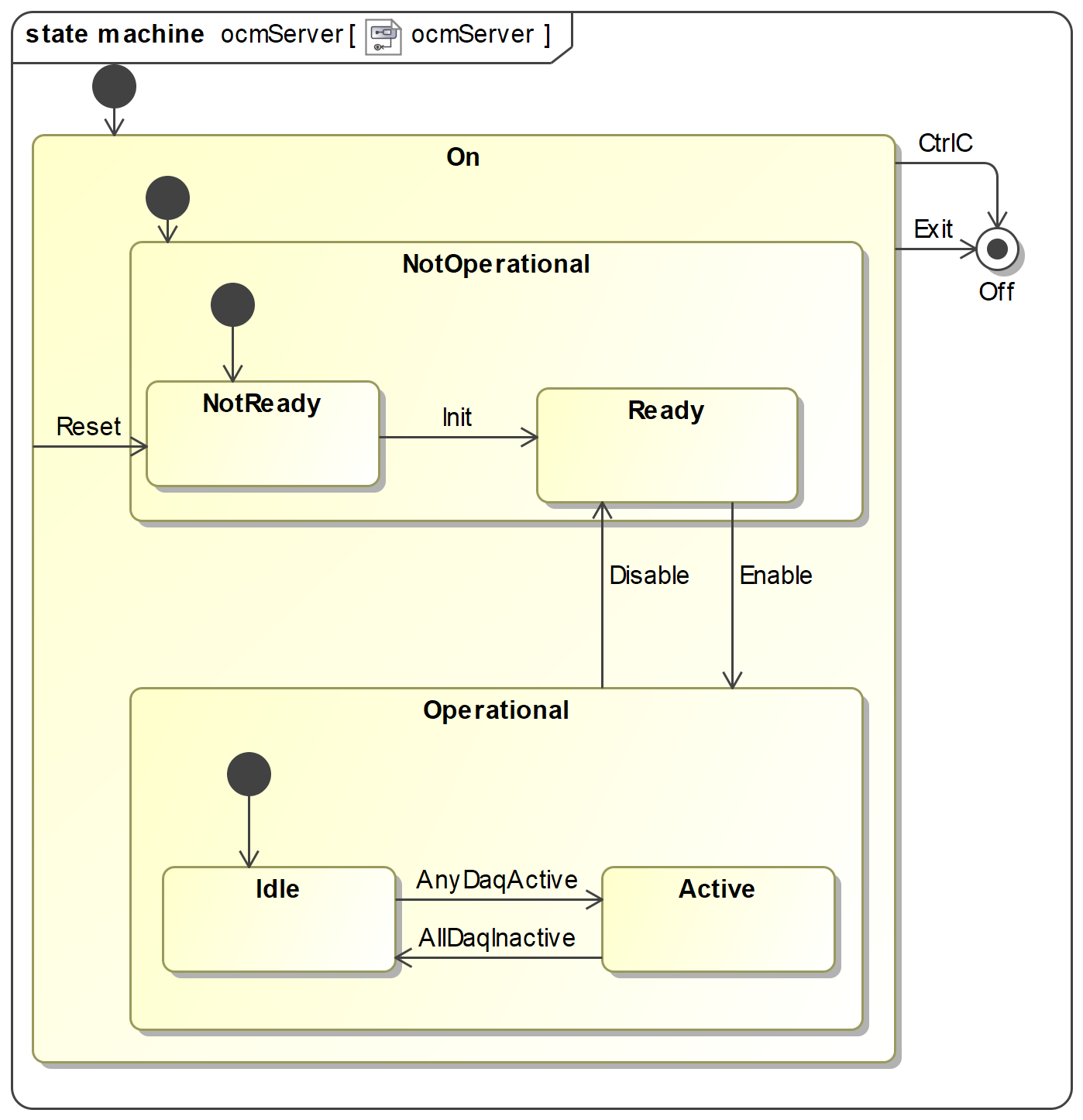

The daqOcmServer state machine is shown in Fig. 14 with states and transitions described below.

Fig. 14 Application state machine implemented by daqOcmServer, which satisfies the state machine expected by stdif.

States

- On

Application is running.

- Off

Application is not running.

- NotOperational

Composite state meaning that daqOcmServer is running and able to accept StdCmds requests, but is not yet fully operational. For daqOcmServer it means in particular that the OcmDaqControl interface is not registered and will not accept any requests.

- NotReady

This is the first non-transitional state. At this point the current implementation has already loaded the configuration and registered the stdif.StdCmds interface.

- Ready

Has no particular meaning for daqOcmServer.

- Operational

In the transition to Operational, daqOcmServer registers the OcmDaqControl interface and is then ready to perform Data Acquisitions.

- Idle

Indicates that there are no active Data Acquisitions.

- Active

Indicates that there is at least one active Data Acquisition.

Note

Active does not mean that daqOcmServer is busy and cannot handle additional requests. It simply means that there is at least one Data Acquisition that is not yet finished.

Since merging is not yet implemented, a Data Acquisition is considered active until it is stopped or aborted.
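The Idle/Active distinction amounts to tracking the set of unfinished Data Acquisitions. The hypothetical Python class below models only that rule: the first Data Acquisition to become active emits AnyDaqActive, and the last one to finish (stopped or aborted, per the note above) emits AllDaqInactive. It illustrates the described behaviour and is not daqOcmServer's implementation.

```python
from typing import List, Set


class OperationalSubstate:
    """Hypothetical model of the Idle/Active substates of Operational."""

    def __init__(self) -> None:
        self.active: Set[str] = set()  # identifiers of unfinished Data Acquisitions
        self.events: List[str] = []    # internal events emitted so far

    @property
    def state(self) -> str:
        return "Active" if self.active else "Idle"

    def daq_started(self, daq_id: str) -> None:
        if not self.active:
            self.events.append("AnyDaqActive")  # first active DAQ: Idle -> Active
        self.active.add(daq_id)

    def daq_finished(self, daq_id: str) -> None:
        if daq_id not in self.active:
            return  # unknown or already finished: nothing changes
        self.active.remove(daq_id)
        if not self.active:
            self.events.append("AllDaqInactive")  # last DAQ done: Active -> Idle
```

Note that finishing one of several Data Acquisitions leaves the state at Active; only the last one triggers the transition back to Idle.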

Transitions

- Init

Triggered by the Init() request.

- Enable

Triggered by the Enable() request.

- Disable

Triggered by the Disable() request.

- Stop

Triggered by the Stop() request.

Note

The behaviour is currently unspecified if this request is issued while OCM is in state Active.

- AnyDaqActive

Internal event created when any Data Acquisition becomes active.

- AllDaqInactive

Internal event created when all Data Acquisitions are inactive.

MAL URI Paths

The following tables summarize the request/reply service paths and topic paths for pub/sub.

| URI Path | Root URI Configuration | Description |
|---|---|---|
| | | Standard control interface |
| | | Data Acquisition control interface |

| Topic Type | URI Path | Root URI Configuration | Description |
|---|---|---|---|
| | | | Standard interface status topic providing information on OCM overall state. Same information is provided with the command |
| | | | Data Acquisition status topic |

Command Line Arguments

Command line argument help is available under the option --help.

- --proc-name ARG | -n ARG (string) [default: ocm]

Process instance name.

- --config ARG | -c ARG (string) [default: config/daqOcmServer/config.yaml]

Config Path to the application configuration file, e.g. --config ocs/ocm.yaml (see Configuration File for configuration file content).

- --log-level ARG | -l ARG (enum) [default: INFO]

Log level to use. One of ERROR, WARNING, STATE, EVENT, ACTION, INFO, DEBUG, TRACE.

- --db-host ARG | -d ARG (string) [default: 127.0.0.1:6379]

Redis database host address.
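When daqOcmServer is started from a script, the options above can be assembled programmatically. In this Python sketch the function server_argv is hypothetical; it uses only the options listed above, validates the log level against the documented values, and omits --config and --db-host unless a value is given so that the server defaults apply.

```python
from typing import List, Optional

# Values accepted by --log-level, as listed above.
LOG_LEVELS = ("ERROR", "WARNING", "STATE", "EVENT", "ACTION", "INFO", "DEBUG", "TRACE")


def server_argv(proc_name: str = "ocm",
                config: Optional[str] = None,
                log_level: str = "INFO",
                db_host: Optional[str] = None) -> List[str]:
    """Build a daqOcmServer command line; None leaves an option at its default."""
    if log_level not in LOG_LEVELS:
        raise ValueError(f"unsupported log level: {log_level}")
    argv = ["daqOcmServer", "--proc-name", proc_name, "--log-level", log_level]
    if config is not None:
        argv += ["--config", config]
    if db_host is not None:
        argv += ["--db-host", db_host]
    return argv


if __name__ == "__main__":
    print(" ".join(server_argv(config="ocs/ocm.yaml", log_level="DEBUG")))
```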

Environment Variables

- $CFGPATH

Used to resolve Config Path configuration file paths.

- $DATAROOT

Specifies the default root path used as output directory for e.g. OCM FITS files and other state storage. The data root can be overridden by the configuration key cfg/dataroot.
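Resolving a Config Path works like a search path lookup. The Python sketch below assumes that $CFGPATH holds directories separated by the platform path separator (a colon on Linux), like $PATH, and that the first directory containing the relative path wins; resolve_config_path is a hypothetical name, not part of the DAQ software.

```python
import os
import tempfile
from pathlib import Path
from typing import Optional


def resolve_config_path(config_path: str, cfgpath: Optional[str] = None) -> Path:
    """Return the first existing file matching config_path under $CFGPATH.

    Assumption: $CFGPATH is an os.pathsep-separated list searched in order.
    """
    search = os.environ.get("CFGPATH", "") if cfgpath is None else cfgpath
    for root in filter(None, search.split(os.pathsep)):  # skip empty entries
        candidate = Path(root) / config_path
        if candidate.is_file():
            return candidate
    raise FileNotFoundError(f"{config_path} not found in CFGPATH={search!r}")


if __name__ == "__main__":
    # Demo with two throwaway search directories; only the second holds the file.
    with tempfile.TemporaryDirectory() as first, tempfile.TemporaryDirectory() as second:
        target = Path(second) / "config" / "daqOcmServer" / "config.yaml"
        target.parent.mkdir(parents=True)
        target.write_text("cfg: {}\n")
        print(resolve_config_path("config/daqOcmServer/config.yaml",
                                  os.pathsep.join([first, second])))
```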

Configuration File

This section describes the configuration file parameters and how to set them.

The configuration file is currently based on YAML and should be installed to one of the paths specified in $CFGPATH, where it can be loaded using the Config Path and the command line argument --config ARG.

If a configuration parameter can be provided via command line, configuration file and environment variable, the precedence order (high to low priority) is:

1. Command line value
2. Configuration file value
3. Environment variable value
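The precedence rule can be expressed as a lookup over layers ordered from highest to lowest priority. In this Python sketch, resolve and the layer dictionaries are hypothetical; cfg/dataroot serves as the example because it can come either from the configuration file or from $DATAROOT.

```python
from typing import Any, Mapping, Optional


def resolve(key: str, *layers: Mapping[str, Any]) -> Optional[Any]:
    """Return key from the first layer that defines it (layers ordered high to low)."""
    for layer in layers:
        if key in layer:
            return layer[key]
    return None


# Hypothetical inputs, highest priority first.
command_line: dict = {}  # no command line override for cfg/dataroot
config_file = {"cfg/dataroot": "/absolute/output/path"}
environment = {"cfg/dataroot": "/value/of/DATAROOT"}  # $DATAROOT mapped to the key

print(resolve("cfg/dataroot", command_line, config_file, environment))  # prints /absolute/output/path
```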

Enumeration of parameters:

- cfg/instrument_id (string)

ESO designated instrument ID. This value is also used as the source for the FITS keyword INSTRUME.

- cfg/dataroot (string) [default: $DATAROOT]

Absolute path to a writable directory where OCM will store files persistently. These are mainly FITS files produced as part of a Data Acquisition. If the directory does not exist, OCM will attempt to create it, including parent directories, and set permissions to 0774 (ug+rwx o+r).

- cfg/daq/workspace (string) [default: {process name}]

Workspace used by daqOcmServer to store Data Acquisition state persistently and later restore that state when starting up (see section Workspace for details). The default value is the process name.

Absolute paths are used as is (recommended). Relative paths are defined relative to cfg/dataroot.

New in version 2.0.0.

- cfg/daq/stale_acquiring_hours (integer) [default: 14]

Parameter used to control when stale Data Acquisitions are archived (discarded) from the Workspace during startup.

Specifically, it controls when a Data Acquisition in state daqif.State.StateAcquiring is automatically archived when recovered from the Workspace because it is considered stale (measured as the time from its creation to the time it is recovered).

New in version 2.0.0.

- cfg/daq/stale_merging_hours (integer) [default: 48]

Parameter used to control when stale Data Acquisitions are archived (discarded) from the Workspace during startup.

Specifically, it controls when a Data Acquisition in state daqif.State.StateMerging is automatically archived when recovered from the Workspace because it is considered stale (measured as the time from its creation to the time it is recovered).

New in version 2.0.0.

- cfg/log_properties (string)

Config Path to a log4cplus log configuration file. See also Logging Configuration for important limitations.

- cfg/sm_scxml (string) [default: config/daqOcmServer/sm.xml]

Config Path to the SCXML model. This should be left at the default, which is provided during installation of daqOcmServer.

- cfg/req_endpoint (string) [default: zpb.rr://127.0.0.1:12081/]

MAL server request root endpoint on which to accept requests. Trailing slashes are optional, e.g. "zpb.rr://127.0.0.1:12345/" or "zpb.rr://127.0.0.1:12345".

- cfg/pub_endpoint (string) [default: zpb.ps://127.0.0.1:12082/]

MAL server publish root endpoint from which topics are published. Trailing slashes are optional, e.g. "zpb.ps://127.0.0.1:12345/" or "zpb.ps://127.0.0.1:12345".

- cfg/oldb_uri_prefix (string) [default: cii.oldb:/elt/{process-name}]

Optional CII OLDB URI prefix that is prepended to all database keys, in the form cii.oldb:/{path}. By default the process instance name is used as the path element. Example: "cii.oldb:/elt/instrument-name/ocm".

New in version 2.0.0.

- cfg/oldb_conn_timeout (integer) [default: 2]

Timeout in seconds to use when communicating with the CII OLDB server.

- cfg/dpm/req_endpoint (string)

daqDpmServer request endpoint without service name. Example: "zpb.rr://127.0.0.1:12345".

New in version 2.0.0.

- cfg/dpm/pub_endpoint (string)

daqDpmServer publish endpoint without service name. Example: "zpb.ps://127.0.0.1:12345".

New in version 2.0.0.

- cfg/dpm/timeout_sec (integer) [default: 5]

MAL timeout, in seconds, used when sending requests to daqDpmServer.

New in version 2.0.0.
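Two of the parameters above involve small rules worth spelling out: how cfg/daq/workspace is combined with cfg/dataroot, and when a recovered Data Acquisition counts as stale. The Python sketch below encodes both as described. The function names are hypothetical, state names are plain strings standing in for the daqif.State values, and treating a duration exactly equal to the limit as not stale is an assumption.

```python
from datetime import datetime, timedelta
from pathlib import PurePosixPath
from typing import Dict, Optional

# Documented defaults of cfg/daq/stale_acquiring_hours and cfg/daq/stale_merging_hours.
STALE_HOURS: Dict[str, int] = {"StateAcquiring": 14, "StateMerging": 48}


def workspace_path(dataroot: str, workspace: Optional[str], proc_name: str) -> PurePosixPath:
    """Absolute workspace values are used as is, relative ones are joined to
    dataroot, and an unset value falls back to the process name."""
    ws = PurePosixPath(workspace or proc_name)
    return ws if ws.is_absolute() else PurePosixPath(dataroot) / ws


def is_stale(state: str, created: datetime, recovered: datetime,
             limits: Optional[Dict[str, int]] = None) -> bool:
    """True if a recovered Data Acquisition should be archived as stale.

    Only the two states covered by the parameters are considered; a duration
    exactly equal to the limit is assumed not to be stale.
    """
    hours = STALE_HOURS if limits is None else limits
    if state not in hours:
        return False
    return recovered - created > timedelta(hours=hours[state])
```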

Full example:

    cfg:
      instrument_id: "TEST"
      dataroot: "/absolute/output/path"
      sm_scxml: "config/daqOcmServer/sm.xml"
      req_endpoint: "zpb.rr://127.0.0.1:12340/"
      pub_endpoint: "zpb.ps://127.0.0.1:12341/"
      db_timeout_sec: 2
      log_properties: "log.properties"
      daq:
        # Relative paths are relative to dataroot,
        # absolute paths are used as is.
        workspace: "ocm"
        # Stale DAQ configuration (determines when they are automatically
        # archived at startup)
        stale_acquiring_hours: 18
        stale_merging_hours: 720
      dpm:
        # DPM communication configuration
        req_endpoint: "zpb.rr://127.0.0.1:12350/"
        pub_endpoint: "zpb.ps://127.0.0.1:12351/"
        timeout_sec: 5
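Since trailing slashes on root endpoints are optional, a script that compares or validates configured endpoints should normalize them first. The hypothetical Python helpers below append exactly one trailing slash and check that request endpoints use zpb.rr and publish endpoints use zpb.ps. That scheme rule is an assumption drawn from the examples in this section, not a documented constraint.

```python
from typing import Dict

# Assumption based on the examples: request endpoints use zpb.rr, publish endpoints zpb.ps.
EXPECTED_SCHEME = {"req_endpoint": "zpb.rr", "pub_endpoint": "zpb.ps"}


def normalize_endpoint(uri: str) -> str:
    """Return uri with exactly one trailing slash (the slash is optional in the config)."""
    scheme, sep, rest = uri.partition("://")
    if not sep or not rest.strip("/"):
        raise ValueError(f"malformed endpoint: {uri!r}")
    return f"{scheme}://{rest.rstrip('/')}/"


def check_endpoints(section: Dict[str, str]) -> Dict[str, str]:
    """Normalize req_endpoint/pub_endpoint of a config section (cfg or cfg/dpm)."""
    result: Dict[str, str] = {}
    for key, scheme in EXPECTED_SCHEME.items():
        if key not in section:
            continue
        uri = normalize_endpoint(section[key])
        if not uri.startswith(scheme + "://"):
            raise ValueError(f"{key} should use {scheme}: {uri}")
        result[key] = uri
    return result
```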

Workspace

New in version 2.0.0.

The daqOcmServer workspace is the designated file system area used to store Data Acquisition state information persistently. The workspace location is controlled with the cfg/daq/workspace parameter, and the workspace is automatically initialized if the directory does not exist. To guard against accidental misconfiguration, daqOcmServer will refuse to use the directory if it has unexpected file contents.

Note

When daqOcmServer is not running it is safe to delete the complete workspace. Be aware that if there are Data Acquisitions in progress this information will be lost.

The information stored in the workspace is:

- List of known Data Acquisitions.
- For each Data Acquisition, its status, which contains the same information published as daqif.DaqStatus.
- For each Data Acquisition, its context, which contains the information necessary to create the Data Product Specification, for example the data sources and the FITS keywords provided to daqOcmServer.

When daqOcmServer starts up, it loads the stored information so that it is possible to continue the process. To avoid recovering completely obsolete Data Acquisitions, two configuration parameters are used to discard them, depending on whether the Data Acquisition was last known to be in state daqif.State.StateAcquiring or daqif.State.StateMerging:

- cfg/daq/stale_acquiring_hours
- cfg/daq/stale_merging_hours

Important

As offline changes are not reflected in the persistent state it may happen that the recovered state is inaccurate. This is always a risk and currently daqOcmServer does not actively try to correct this.

The structure is as follows:

- /

Workspace root as configured via configuration file, environment variable or command line.

- /list.json

List of Data Acquisitions, as an array of Data Acquisition identifiers.

- /in-progress/

Root directory containing files related to each Data Acquisition.

- /in-progress/{id}-status.json

Contains the persistent status for each Data Acquisition (where {id} is the Data Acquisition identifier).

- /in-progress/{id}-context.json

Contains the persistent context for each Data Acquisition (where {id} is the Data Acquisition identifier).

- /archive/

When a Data Acquisition is completed (transitions to state daqif.DaqState.StateCompleted), its in-progress files are moved here.

Note

Files in this directory are safe to delete. An operational procedure is foreseen to specify when this should be done.

The following shows an example of files and directories in the workspace with an in-progress Data Acquisition.

    .
    ├── archive/
    └── in-progress/
        ├── TEST.2021-05-18T14:49:03.905-context.json
        └── TEST.2021-05-18T14:49:03.905-status.json
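The layout above can be sketched in Python to show how the files relate. The helpers init_workspace, daq_files and archive_daq are hypothetical. The set of entries accepted in the workspace root, the empty list.json written on initialization and the removal of archived identifiers from list.json are assumptions, not documented daqOcmServer behaviour.

```python
import json
import shutil
import tempfile
from pathlib import Path
from typing import List

# Assumed set of entries allowed in the workspace root.
EXPECTED_ENTRIES = {"list.json", "in-progress", "archive"}


def init_workspace(root: Path) -> None:
    """Create the workspace layout, refusing a directory with unexpected contents."""
    root.mkdir(parents=True, exist_ok=True)
    unexpected = {p.name for p in root.iterdir()} - EXPECTED_ENTRIES
    if unexpected:
        raise RuntimeError(f"refusing to use {root}: unexpected {sorted(unexpected)}")
    (root / "in-progress").mkdir(exist_ok=True)
    (root / "archive").mkdir(exist_ok=True)
    list_file = root / "list.json"
    if not list_file.exists():
        list_file.write_text("[]")  # assumed initial content: empty array of ids


def daq_files(root: Path, daq_id: str) -> List[Path]:
    """Per-DAQ status and context files under in-progress/."""
    base = root / "in-progress"
    return [base / f"{daq_id}-status.json", base / f"{daq_id}-context.json"]


def archive_daq(root: Path, daq_id: str) -> None:
    """Move a completed DAQ's files to archive/ and drop its id from list.json (assumed)."""
    for path in daq_files(root, daq_id):
        if path.exists():
            shutil.move(str(path), str(root / "archive" / path.name))
    list_file = root / "list.json"
    ids = json.loads(list_file.read_text())
    list_file.write_text(json.dumps([i for i in ids if i != daq_id]))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        ws = Path(tmp) / "ocm"
        init_workspace(ws)
        print(sorted(p.name for p in ws.iterdir()))
```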

Loggers

The following loggers are used (see Logging Configuration for how to configure verbosity with a log properties file):

- daq.ocm

General application logging.

- daq.ocm.manager

Used by the component that manages all controllers and certain other functions.

- daq.ocm.manager.awaitstate

Logs details about clients waiting for a particular state.

- daq.ocm.controller

Used by the component that controls the Data Acquisition lifecycle.

- daq.ocm.eventlog

Used to log events in a more structured manner than normal logs.