9. Logging¶

| Revision: | 1.8 |
|---|---|
| Status: | Released |
| Repository: | |
| Project: | ELT CII |
| Folder: | trunk/deliverables/phase10 |
| Document ID: | CSL-DOC-20-181435 |
| File: | MAN-ELT_Log.docx |
| Owner: | Rodrigo Fernández Rodríguez |
| Last modification: | March 14, 2022 |
| Created: | July 5, 2019 |

| Prepared by | Reviewed by | Approved by |
|---|---|---|
| Rodrigo Fernández Rodríguez (CSL) | Borut Terpinc (CSL SWE), Jan Pribošek (CSL SWE) | Gregor Čuk (CSL) |

| Revision | Date | Changed/reviewed | Section(s) | Modification |
|---|---|---|---|---|
| 0.1 | 25.02.2020 | rfernandez | All | Document creation. |
| 1.0 | 06.05.2020 | rfernandez/bterpinc | All | Updated all sections and added tracing. Final review. |
| 1.1 | 11.09.2020 | dkumar | 9.2.3, 9.2.2.2, 9.2.3 | Tracing changes. New heading 9.2.3. |
| 1.2 | 28.09.2020 | rfernandez | All | Updates after LAT topical review. |
| 1.3 | 28.09.2020 | dkumar | 9.2.4, 9.2.3 | OLDB context propagation. MAL pub/sub examples. |
| 1.4 | 29.09.2020 | rfernandez | 4.8, 5 | Rearranged TOC structure. Moved String formatting and CLI tool sections. |
| 1.5 | 15.10.2020 | rfernandez | 2, 8.1, 6.1.1, 8.2.1.1, 8.2.1.2 | Added “separation” between logging and tracing. Added description of Filebeat multiline options. New chapters added. |
| 1.6 | 01.12.2020 | rfernandez | 4 | Fixed inconsistencies in C++ example executables names. |
| 1.7 | 27.01.2021 | rfernandez | 2, 4, 6, 7.1 | Replaced usages of INTROOT with CIISRV_ROOT. |
| 1.8 | 14.03.2022 | mschilli | 5, 6, 7 | logSend (5) now part of Tools (now 6). Added ciiLogViewer. |

Confidentiality

This document is classified as a confidential document. As such, it or parts thereof must not be made accessible to anyone not listed in the Audience section, neither in electronic nor in any other form.

Scope

This document is the manual for the CII Logging system.

Audience

This document is aimed at those Cosylab and ESO employees involved with the ELT Core Integration Infrastructure Software project, as well as other Users and Maintainers of the ELT Core Integration Infrastructure Software.

Glossary of Terms

| API | Application Programming Interface |
|---|---|
| CII | Core Integration Infrastructure |
| MAL | Middleware Abstraction Layer |
| OLDB | Online Database |
| EA | Engineering Archive |
| CLI | Command Line Interface |
| GUI | Graphical User Interface |
| ES | Elasticsearch |
| FB | Filebeat |
| LS | Logstash |
| JSON | JavaScript Object Notation |
| SVN | Subversion |
| XML | Extensible Markup Language |
| YAML | YAML Ain’t Markup Language |
| TTL | Time-To-Live |

References

ESO, Core Integration Infrastructure Requirements Specification, ESO-192922 Version 7

Cosylab, ELT CII Log Transfer Document, CSL-DOC-20-181435, Version X.X

Cosylab, ELT CII Error handling Manual Document, CSL-DOC-20-181435, Version X.X

Python logging, https://docs.python.org/3/library/logging.html

Logstash Monitoring APIs, https://www.elastic.co/guide/en/logstash/6.8/monitoring.html

Filebeat HTTP Monitoring endpoint, https://www.elastic.co/guide/en/beats/filebeat/6.8/http-endpoint.html

Logstash-to-Logstash Communication, https://www.elastic.co/guide/en/logstash/6.8/ls-to-ls.html

Logstash Settings File, https://www.elastic.co/guide/en/logstash/6.8/logstash-settings-file.html

Log4j2 Async Logging, https://logging.apache.org/log4j/2.x/manual/async.html

Logstash Reference, https://www.elastic.co/guide/en/logstash/6.8/index.html

Filebeat Reference, https://www.elastic.co/guide/en/beats/filebeat/6.8/index.html

Elasticsearch Reference, https://www.elastic.co/guide/en/elasticsearch/reference/6.6/index.html

Kibana User Guide, https://www.elastic.co/guide/en/kibana/6.6/index.html

Logtrail, https://github.com/sivasamyk/logtrail

Checker Framework, https://checkerframework.org/

Kibana Index Patterns, https://www.elastic.co/guide/en/kibana/6.8/index-patterns.html

Lucene Query Syntax, https://www.elastic.co/guide/en/kibana/6.8/lucene-query.html

Log4cplus logging macros, https://log4cplus.sourceforge.io/docs/html/loggingmacros_8h_source.html

Elasticsearch cross-cluster search, https://www.elastic.co/guide/en/kibana/6.6/management-cross-cluster-search.html

OpenTracing API docs, https://opentelemetry.io/docs/

Jaeger Tracing docs, https://www.jaegertracing.io/docs/1.17/

Jaeger Client Libraries, https://www.jaegertracing.io/docs/1.17/client-libraries/

Jaeger Trace Adjusters, https://pkg.go.dev/github.com/jaegertracing/jaeger/model/adjuster

9.1. Overview¶

This document is a user manual for the CII Logging and tracing systems. It explains how to use the Logging Client API through the ELT CII common library, and how to use the GUI applications that interact with it. It also explains how to use the different services that comprise the distributed CII logging system.

All examples in this manual are also presented in SVN code (project logging-examples).

9.2. Introduction¶

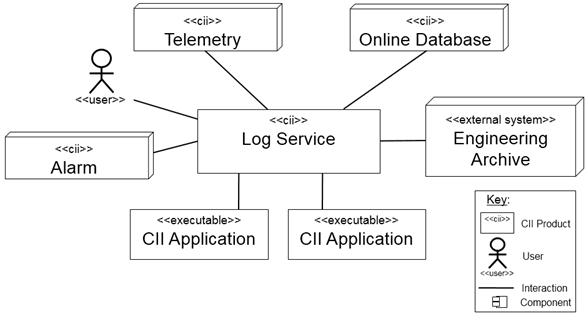

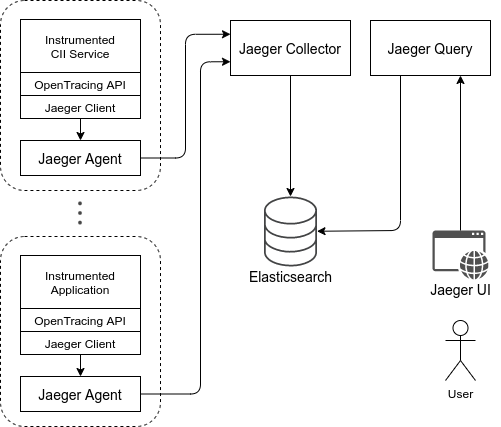

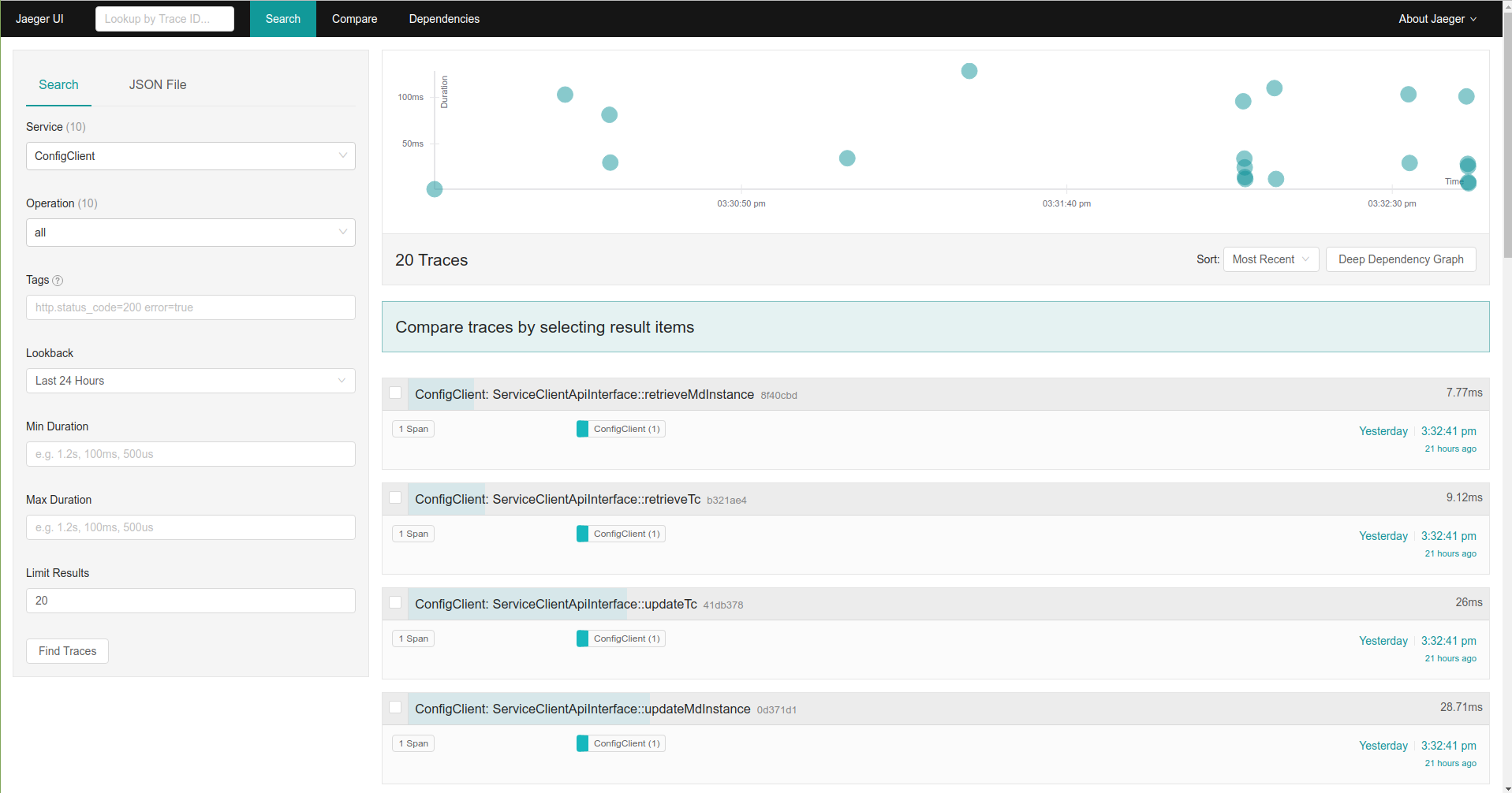

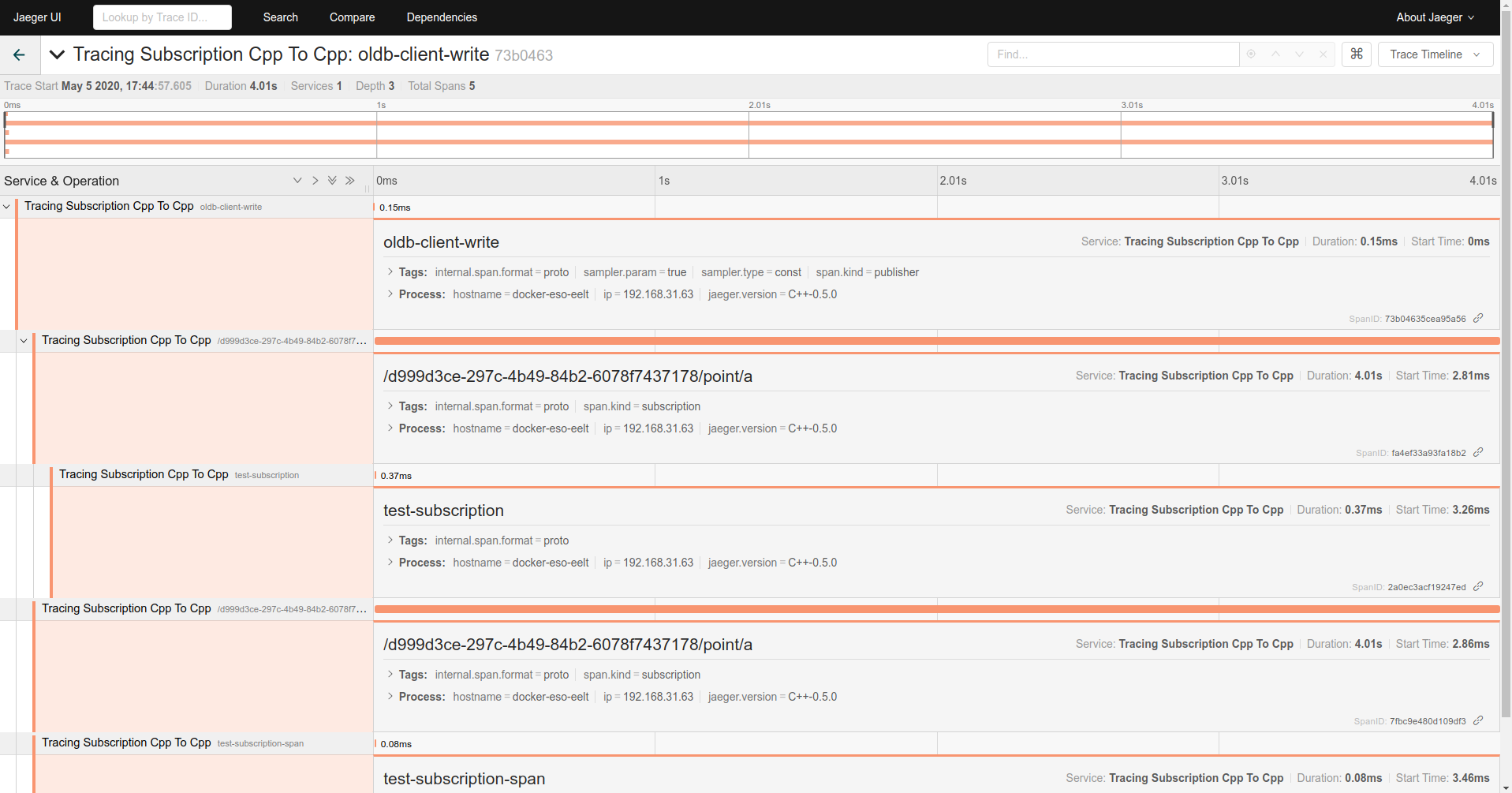

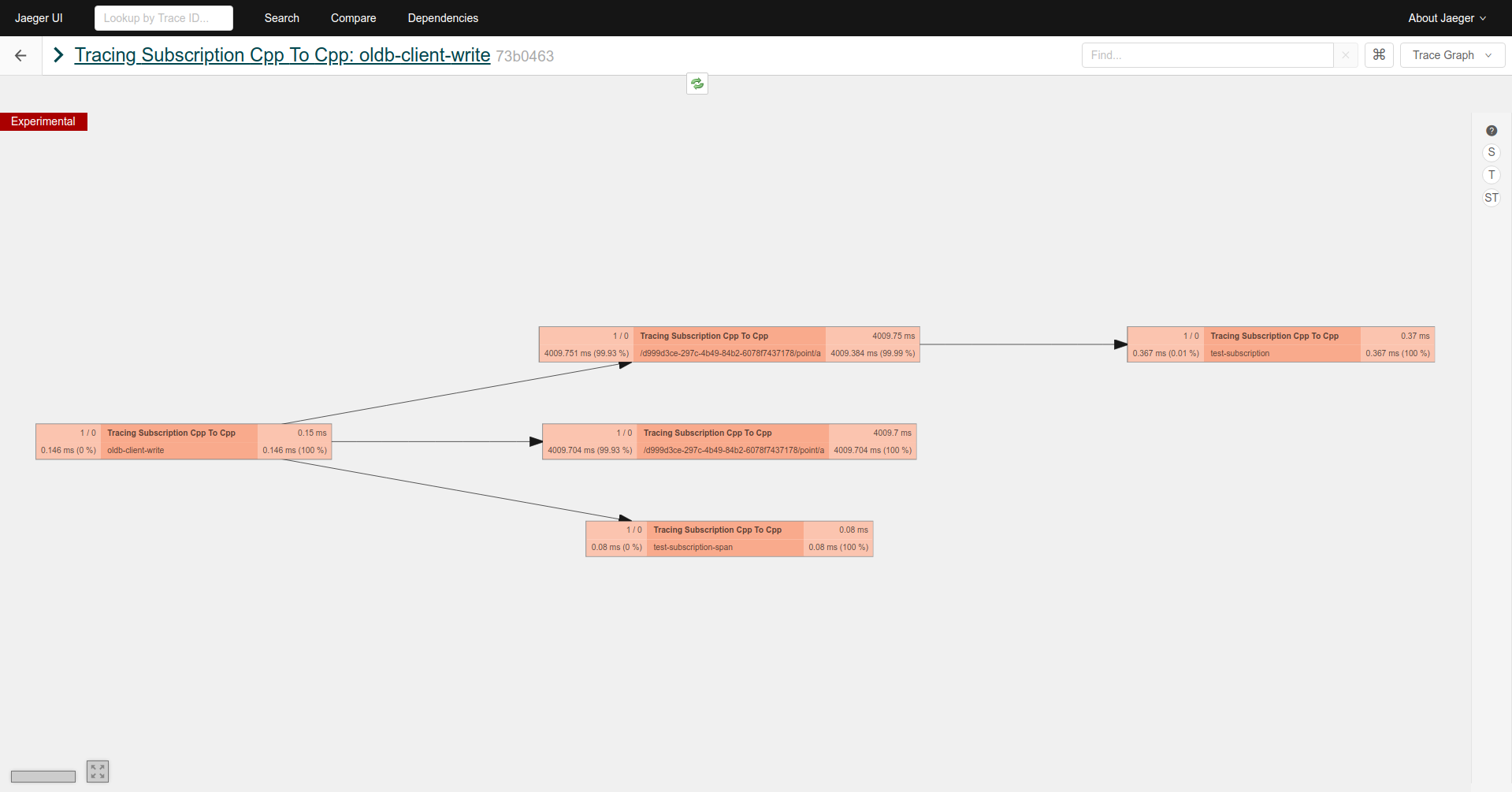

CII Log provides the logging and tracing services of the Control System. Logging pertains to all aspects of CII services. Its purpose is to provide basic and detailed information of the different services and systems in the CII in order to have a better understanding of the behaviour of the distributed system, and in particular to troubleshoot the system in case of problems. Figure 2‑1 shows how the CII Log Service interacts with other elements of the ELT Core Integration Infrastructure. Logging is considered a basic service and doesn’t use any other CII service.

Figure 2‑1 Log interaction

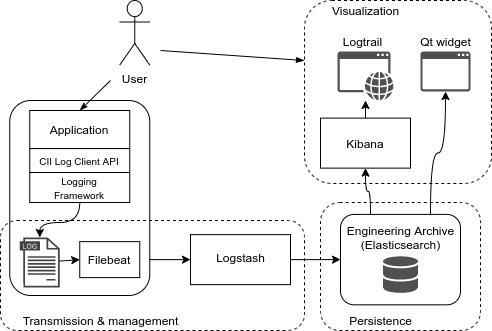

CII Log system components can be divided into four subcomponents according to their responsibilities:

Client APIs

Transmission and Management

Persistence

Data visualization

Figure 2‑2 shows the standard workflow between the different parts of the CII Log system, and the elements that comprise each of the sub-parts.

Figure 2‑2 Standard workflow of CII Log system

The CII Log Client API enables users to produce log messages from their applications using Logger objects (see 2.2). These messages are produced as strings and follow a predefined format defined in the CII Logging library (see 2.3). The API builds on existing logging frameworks and extends them in order to provide all CII functionalities. The underlying logging frameworks are:

Log4j (Java) [4]

Log4cplus (C++) [5]

Python logging (Python) [6]

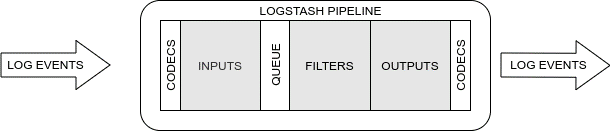

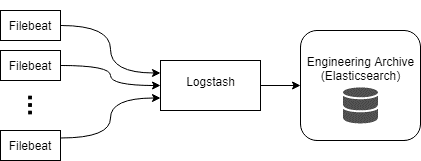

Filebeat [13] and Logstash [12] are used as transmission and management services. A Filebeat instance is responsible for harvesting CII logs from a single host (see 2.1). Each harvested log message is then wrapped in JSON and sent to a Logstash instance.

The serialized message is not parsed to the individual log fields yet, as this is the responsibility of the Logstash instance that first receives the unparsed log event.

Apart from extracting all the CII Log fields (see Appendix B), Logstash is responsible for persisting log events to an Elasticsearch cluster, forwarding them to another Logstash instance, and/or outputting them to a file or console. In addition to this, it allows for further management and routing of the serialized log events.

Elasticsearch [14] is used as persistence backend, allowing for remote storage of log events. It is part of the same software stack as Filebeat and Logstash, therefore it provides a seamless integration of the Engineering archive with the CII Log transmission and management subsystem.

Kibana [15] with Logtrail plugin [16], as well as a Qt GUI and widget are used as visualization tools for querying and browsing CII logs.

Tracing is separated from logging, as it serves a different purpose. Tracing is explained in detail in section 8.

9.2.1. Logsink directory¶

In order to enable the CII Logging transmission and management subsystem to collect logs produced at a host using the CII Log Client API, a logsink directory is used. This directory is located in $CIISRV_ROOT/logsink, and any log file created here will be harvested by a Filebeat instance, if available on the host.

It is the responsibility of the system administrator to ensure that old unused log files are deleted regularly from the logsink directory. A cron job that periodically deletes log files that haven’t been modified for a certain amount of time can be used for this purpose.
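The cleanup described above could be sketched as a small Python script that a cron job calls. This is only an illustration and not part of the CII tooling: the function name `delete_stale_logs` and the age threshold are assumptions, and only the Python standard library is used.

```python
import time
from pathlib import Path


def delete_stale_logs(logsink_dir, max_age_days, now=None):
    """Delete regular files in logsink_dir whose last modification is
    older than max_age_days. Returns the deleted paths in sorted order.

    Hypothetical helper for illustration; not part of the CII Log API.
    """
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 24 * 60 * 60
    deleted = []
    for path in sorted(Path(logsink_dir).iterdir()):
        # Only plain files are considered; subdirectories are left alone.
        if path.is_file() and path.stat().st_mtime < cutoff:
            path.unlink()
            deleted.append(path)
    return deleted
```

A cron entry could then run a one-line wrapper that calls `delete_stale_logs` with the `$CIISRV_ROOT/logsink` path and a retention period chosen by the administrator. Log files that are still being written keep a recent modification time and are therefore not removed.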

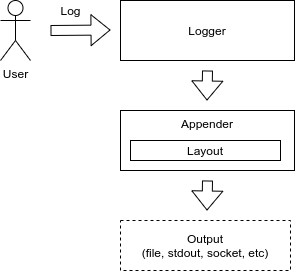

9.2.2. Loggers and Appenders¶

The architecture of the three used logging frameworks is relatively similar. The main elements of this architecture are the loggers, the appenders, and the layouts.

While the loggers are the elements responsible for exposing the API to create log events, appenders are responsible for writing those events to the appropriate outputs, whether it is stdout, a file, a database, a socket, or any other.

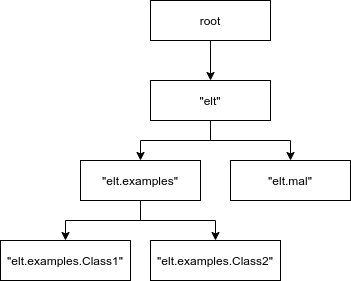

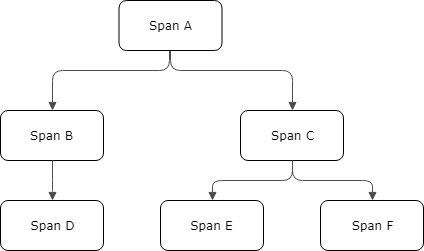

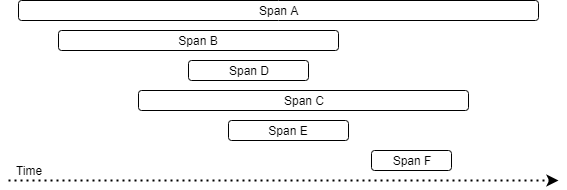

Loggers are organized in a named hierarchical structure in a parent-child relationship fashion. Each logger has a name with dot-separated components, which indicates the position of the logger in the hierarchy. An example hierarchy is shown in Figure 2‑4.

There must always be a root logger with an empty name.

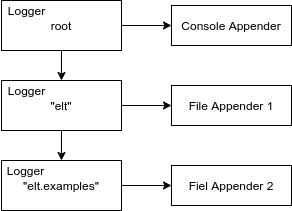

Appenders are attached to loggers. Each logger can have one or more appenders attached, and the same appender can be attached to multiple loggers.

Figure 2‑5 Loggers and Appenders example

Another possible scenario is having multiple child loggers with no appenders attached, with the appenders attached only to the root/parent logger. This is useful for handling logs from different classes or packages through the child loggers while delegating the output of those log messages to the parent/root logger and its appenders.
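The scenario of child loggers without appenders can be illustrated with the plain Python logging framework (where appenders are called handlers). This is a minimal sketch that does not use the CII Log Client API; the logger names `demo_app` and `demo_app.io.reader` are hypothetical.

```python
import io
import logging

# Parent logger with a single handler. Propagation to the real root logger is
# switched off so that this sketch does not interfere with other logging setup.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s %(name)s %(message)s"))

parent = logging.getLogger("demo_app")
parent.propagate = False
parent.setLevel(logging.ERROR)
parent.addHandler(handler)

# Child logger: no handlers and no level of its own. Its position in the
# hierarchy is given by the dot-separated name.
child = logging.getLogger("demo_app.io.reader")

child.info("discarded")   # below the ERROR level inherited from the parent
child.error("delivered")  # passed up to the parent's handler

captured = stream.getvalue()
```

The child logger has no handlers of its own, yet its ERROR event is written by the handler of the parent, and the logger name in the output still identifies where the event came from.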

9.2.3. Log Layouts¶

Log layouts are components of the logging libraries responsible for serializing a log event into the format that will be output by the appenders, usually a string.

CII Logging library provides two different layouts that produce plain strings according to the CII Log format:

CiiLayout

CiiSimpleLayout

The CiiLayout is intended both for human and machine consumption, and it must be used for logs written to the logsink directory (see 2.1). It contains the fields described in Appendix B, in the same order as shown there.

The CiiSimpleLayout is a simplified version and is intended for human consumption. It is composed of the date and time of the log event, the log type, a log ID, the message and all the optional fields described in Appendix B.

Additional details and usage instructions can be found in section 4.4.

Example log messages using both the CiiLayout and the CiiSimpleLayout are included in section 4.5. Examples of how to use the layouts in configurations are included in section 4.3.

Appendix B describes the fields of which a CII log is composed. All optional fields can be added to the log message using the CII Log Client API. See section 4.7 for further details.

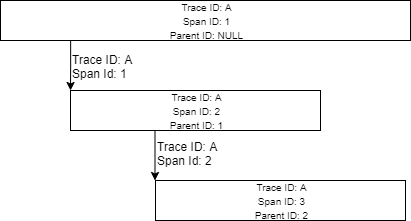

TraceID and SpanID are used to log information about the current tracing context (see section 8).

ErrorTypeName, ErrorDateTime, ErrorMessage, and ErrorTrace are used to log a formatted CiiException.

9.2.4. Postmortem buffer¶

The CII Log Client API offers a postmortem log buffer facility. It enables users to obtain, on demand, logging information that would otherwise have been lost due to filtering by the active logging configuration.

The postmortem buffer stores produced log events for all logging levels, regardless of the filtering configurations of the loggers and appenders.

This buffer is time-bound. Events are evicted after a defined time is elapsed. The time-to-live (TTL) for the buffered log events can be configured by the user.

The flushing of this buffer is triggered on demand through the CII Log Client API. When this happens, the buffered events are flushed to the appropriate destinations.

By default, this buffer is disabled. Configuration and usage instructions can be found in section 4.8.
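The idea of a time-bound buffer that is flushed on demand can be sketched with a custom handler for the plain Python logging framework. This is not the CII implementation: the class name `PostmortemBuffer`, its `flush_to` method, and the injectable clock are assumptions made for illustration. In this simplified sketch the buffer only sees events that pass the logger level, so the logger has to be set to the lowest level and the filtering left to the other handlers.

```python
import logging
import time
from collections import deque


class PostmortemBuffer(logging.Handler):
    """Keep every received record for ttl_s seconds and replay on demand.

    Hypothetical illustration; not part of the CII Log Client API.
    """

    def __init__(self, ttl_s, clock=time.monotonic):
        super().__init__(level=logging.NOTSET)  # accept all levels
        self.ttl_s = ttl_s
        self._clock = clock
        self._records = deque()  # (arrival time, record), oldest first

    def _evict(self):
        # Drop records that have outlived the time-to-live.
        cutoff = self._clock() - self.ttl_s
        while self._records and self._records[0][0] < cutoff:
            self._records.popleft()

    def emit(self, record):
        self._evict()
        self._records.append((self._clock(), record))

    def flush_to(self, target):
        """Pass all non-expired records to the target handler and clear
        the buffer. Returns the number of records flushed."""
        self.acquire()
        try:
            self._evict()
            flushed = [record for _, record in self._records]
            self._records.clear()
        finally:
            self.release()
        for record in flushed:
            target.handle(record)
        return len(flushed)
```

Attaching such a handler next to a console handler configured for ERROR would let DEBUG and INFO events be recovered after a failure, as long as they are younger than the configured TTL.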

9.3. Prerequisites¶

This section describes the prerequisites for using the CII Logging libraries and applications. Note that the preparation of the environment (i.e. installation of required modules, configuration, running of services) is not in the scope of this document. Consult your administrator and the Log TRD document for information on how to establish the environment for usage of CII Logging libraries and applications.

9.3.1. WAF Modules¶

For using the CII Logging and tracing libraries and CLI tools, the following modules must be built and installed with WAF in the specified order:

MAL (elt-mal)

Client APIs (client-api)

For using the Logging Qt GUI application and Logging Qt widgets, in addition to the above, the following module must be built and installed:

Qt Widgets library (elt-qt-widgets)

9.3.2. Services¶

This section describes the services used by logging. These services should be managed and maintained by system administrators. For details on how to use the CII Log Client API, skip to section 3.3.

9.3.2.1. Logging Services¶

The services described below are used for transmission, management, persistence, and querying of distributed CII logs. It must be noted that they are not necessary in order to use the Logging Client API.

Distributed CII Logging management is handled using the Elastic stack, which is comprised of the following components:

Filebeat [13]

Logstash [12]

Elasticsearch [14]

Kibana [15]

9.3.2.1.1. Filebeat¶

Filebeat is a lightweight log harvester and shipper service. It is used by CII Logging system to collect logs produced by applications using the CII Log Client API. Each host producing logs that need to be collected from logsink directory requires one properly configured instance of Filebeat running.

See the Log TRD document on how to set up the Filebeat service. Configuration instructions can be found in section 6.1.

9.3.2.1.2. Logstash¶

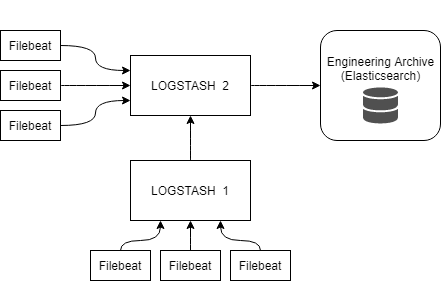

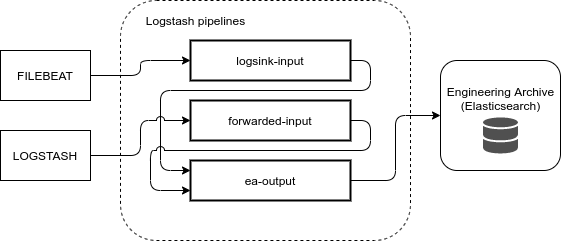

Logstash is a server-side data processing pipeline service. It is used by the CII Logging system to ingest and parse the log events sent by one or many Filebeat instances, or to receive the log events forwarded by another Logstash instance.

These log events can then be processed by any number of output handlers, such as handlers for persistence to an Elasticsearch instance, forwarding to another Logstash instance, writing to a file, or to stdout. How the log events are routed to the Logstash outputs can be controlled based on log event properties, such as the level or the audience.

At least one instance of Logstash is necessary to process and persist the log events collected by the Filebeat instances.

See the Log TRD document on how to set up the Logstash service. Configuration instructions can be found in section 6.2.

9.3.2.1.3. Elasticsearch¶

Elasticsearch is a RESTful distributed document-oriented database and a search and analytics engine. It is used by CII Logging system as a persistence database for logs produced using the CII Log Client API in the distributed system.

It is required to have at least one Elasticsearch instance to be able to persist the log events.

See the Log TRD document on how to set up the Elasticsearch service.

9.3.2.1.4. Kibana¶

Kibana is a frontend application that provides search and data visualization capabilities for data indexed in Elasticsearch. Kibana is used by CII Logging system, together with the Logtrail plugin [16], in order to allow users to query and browse persisted CII logs.

At least one Kibana instance must be available for the user to be able to browse the persisted logs. Each Kibana instance is configured to use one Elasticsearch instance or cluster. It is also possible to configure a Kibana instance to query other remote Elasticsearch clusters.

See the Log TRD document on how to set up the Kibana service and the Logtrail plugin. Configuration instructions for Kibana and the Logtrail plugin can be found in section 7.2.

9.3.3. Includes/Imports¶

For basic usage of the Logging library, the user needs the CiiLogManager class. This class contains the API for interacting with the underlying Logging framework using the CII Log extensions, whether it’s Log4j (Java), Log4cplus (C++), or Python logging.

It provides methods for configuring the logging system, getting a Logger object, or flushing the postmortem buffer. Detailed API per language is provided in Appendix A. Additional details can be found in section 4.1.

In addition, the language-specific Logger and Level classes shall be imported. Level class is not necessary if convenience log methods for the different levels are used, such as debug(“message”) instead of log(Level.DEBUG, “message”).
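The equivalence between a convenience method and the generic log call can be shown with the plain Python logging framework. This is a minimal sketch that does not involve the CII Log Client API; the `ListHandler` class and the logger name are hypothetical.

```python
import logging


class ListHandler(logging.Handler):
    """Collect (level number, message) pairs instead of writing them out."""

    def __init__(self):
        super().__init__()
        self.seen = []

    def emit(self, record):
        self.seen.append((record.levelno, record.getMessage()))


collector = ListHandler()
demo_logger = logging.getLogger("convenience_demo")  # hypothetical name
demo_logger.propagate = False
demo_logger.setLevel(logging.DEBUG)
demo_logger.addHandler(collector)

# Convenience method: no level constant is needed.
demo_logger.debug("message")
# Generic call: the level is passed explicitly.
demo_logger.log(logging.DEBUG, "message")
```

Both calls produce a log event with the same level and message, so code that only uses the convenience methods does not need to reference the level constants.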

C++ code also needs to include the ciiException class, since the ciiLogManager configuration methods can throw an exception in case of an error during configuration initialization.

For more advanced use, it is necessary to include the CiiLogMessageBuilder class. The CiiLogAudience enum must be imported as well if an Audience field value is provided. Also, the appropriate CiiException class must be imported.

These includes, in addition to linked libraries, are shown in the example code in logging-examples module.

9.3.3.1. Java¶

import org.apache.logging.log4j.Logger;

import org.apache.logging.log4j.Level;

import elt.log.CiiLogManager;

import elt.log.CiiLogMessageBuilder;

import elt.log.CiiLogAudience;

import elt.log.layout.CiiSimpleLayout;

import elt.log.layout.CiiLayout;

9.3.3.2. C++¶

#include <log4cplus/loggingmacros.h>

#include <ciiLogManager.hpp>

#include <ciiLogMessageBuilder.hpp>

#include <ciiLogConfigurator.hpp>

#include <ciiLogAudience.hpp>

#include <ciiException.hpp>

9.3.3.3. Python¶

import logging

from elt.log import CiiLogManager

from elt.log import CiiLogMessageBuilder

from elt.log import CiiLogAudience

9.4. Logging Library Usage¶

This section describes the CII Logging Client API, and explains through examples how to configure and use the CII Logging API library in an application.

The CII Log Client API is split between two classes: CiiLogManager and CiiLogMessageBuilder.

Because the CII Log Client API builds on different libraries for Java (Log4j), C++ (Log4cplus), and Python (Python logging), each with its own implementation and API, the CII Log Client API method signatures vary across languages and some methods are only available for certain languages.

A complete description of the CII Log Client API for Java, C++, and Python can be found in Appendix A.

9.4.1. CiiLogManager¶

This is the main class of the CII Log Client API. It provides static methods for configuring and initializing the application’s logging, retrieving or creating the logger objects used to log messages, and flushing the postmortem buffer.

The loggers retrieved using the CiiLogManager are used according to the APIs of the specific logging frameworks for each language [4][5][6].

9.4.2. CiiLogMessageBuilder¶

The CiiLogMessageBuilder is aimed at helping users to create correctly formatted log messages with extended information, such as:

Audience

Tracing context

CII Error messages

User-defined log ID

See section 4.7 for usage details and examples.

9.4.3. Logging Configuration¶

Each of the three logging libraries used by the CII Log Client API provides different ways of configuration. The following sections explain how logging is configured for Java, C++, and Python.

If logging is initialized without providing any custom configuration, a default configuration will be used. This configuration will have:

A root logger configured to an ERROR level with two appenders attached.

A stdout appender using a CiiSimpleLayout layout.

A rolling file appender to logsink directory using a CiiLayout. This appender logs to a file with the name eeltdev-output-{timestamp}.log and rotated files with file names following the pattern eeltdev-output-{timestamp}.%i.log, where %i is the index of the rotated file.

With the default configuration, logs are output to both the console and the logsink directory only if the $CIISRV_ROOT environment variable is defined. Otherwise, logs are written to the console only and a warning is issued to the user.
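The behaviour of the default configuration can be approximated with a dictionary for the plain Python logging framework. This is a sketch under stated assumptions and not the CII implementation: the function name `build_default_like_config` is hypothetical, a plain formatter stands in for the CII layouts, and the timestamp in the file name is assumed to be the start time in milliseconds since the epoch. The rotation values are the ones shown in the Python listing of this manual.

```python
import os
import time
import warnings


def build_default_like_config(environ=None, timestamp_ms=None):
    """Return a logging dictConfig that mimics the described default:
    root logger at ERROR, a stdout handler, and a rotating logsink file
    handler that is added only when CIISRV_ROOT is defined.

    Hypothetical helper; a plain formatter replaces the CII layouts.
    """
    environ = os.environ if environ is None else environ
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)  # assumed timestamp format
    config = {
        "version": 1,
        "disable_existing_loggers": False,
        "formatters": {
            "plain": {"format": "%(asctime)s, %(levelname)s, %(name)s, %(message)s"},
        },
        "handlers": {
            "console": {
                "class": "logging.StreamHandler",
                "formatter": "plain",
                "stream": "ext://sys.stdout",
            },
        },
        "root": {"level": "ERROR", "handlers": ["console"]},
    }
    root_dir = environ.get("CIISRV_ROOT")
    if root_dir:
        config["handlers"]["logsink"] = {
            "class": "logging.handlers.RotatingFileHandler",
            "filename": os.path.join(
                root_dir, "logsink", f"eeltdev-output-{timestamp_ms}.log"
            ),
            "maxBytes": 10 * 1024 * 1024,
            "backupCount": 5,
            "formatter": "plain",
            "delay": True,  # open the file only when the first event is written
        }
        config["root"]["handlers"].append("logsink")
    else:
        warnings.warn("CIISRV_ROOT is not set; logging to console only")
    return config
```

The returned dictionary can be passed to `logging.config.dictConfig`. When the CII Log Client API is used, this step is performed by the CiiLogManager, so the sketch only illustrates the decision that depends on the environment variable.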

Examples of default configuration files for Log4j, Log4cplus, and Python are shown in sections 4.3.1, 4.3.2, and 4.3.3.

Note that the default path for any file creation or lookup is the build folder generated when the ‘waf build’ command is called. Any file path given by the developer as a string should therefore be relative to the project build directory.

9.4.3.1. Java¶

Due to extensions to the Log4j library, only a subset of the configuration options is supported when using CII Log Client API. There is no support for configuring logging using Log4j’s ConfigurationBuilder.

The following file configuration options are available:

XML

Properties

JSON

YAML

By default, Log4j will look for a file called log4j2.xml in the classpath. If this file is not found, it will fall back to the default CII logging configuration. If there’s any issue creating the default CII configuration, it will fall back to the default Log4j configuration, which consists of a root logger with an ERROR level and a console appender attached to it.

It is possible to specify which configuration file will be loaded by Log4j by setting the system property log4j.configurationFile to the path of the configuration file.

It can be passed as a command line argument as:

-Dlog4j.configurationFile=path/to/log4j2configFile.xml

Or set at runtime before Log4j has been initialized:

System.setProperty("log4j.configurationFile", "path/to/log4jconfigFile.xml");

It is also possible to initialize a custom configuration dynamically using the Log4j Configurator class:

Configurator.initialize("configuration_name", "path/to/log4jconfigFile.xml");

The listing below shows what an XML file with a configuration equivalent to the default CII configuration would look like.

Listing 4‑1 Example Java default configuration

<?xml version="1.0" ?>

<Configuration name="1584706371399" status="WARN">

<Appenders>

<Console name="ConsoleAppender" target="SYSTEM_OUT">

<CiiSimpleLayout/>

</Console>

<RollingFile name="LogsinkFileAppender" append="true"

createOnDemand="true"

fileName="${env:CIISRV_ROOT}/logsink/eeltdev-output-1584706371399.log"

filePattern=

"${env:CIISRV_ROOT}/logsink/eeltdev-output-1584706371399.%i.log">

<Policies>

<SizeBasedTriggeringPolicy/>

</Policies>

<CiiLayout/>

</RollingFile>

</Appenders>

<Loggers>

<Root level="ERROR" includeLocation="true">

<AppenderRef ref="ConsoleAppender"/>

<AppenderRef ref="LogsinkFileAppender"/>

</Root>

</Loggers>

</Configuration>

Note the use of CiiLayout and CiiSimpleLayout. These are loaded as plugins by Log4j on initialization. For this to work as intended, the CiiLogManager class must be loaded by the classloader before Log4j is initialized. CII Log layouts are described in more detail in section 4.4.

To troubleshoot the initialization, it is possible to enable the debug level on the internal Log4j StatusLogger by setting system property log4j2.debug. If this property is set, Log4j will output its internal logs to console.

-Dlog4j2.debug=true

9.4.3.2. C++¶

In addition to the default configuration, the CII Log Client API provides support for custom configuration of the Log4cplus logging framework. Two configuration options are available:

Properties object

Properties file

The CiiLogManager class offers methods that can take either an object or a file containing Log4cplus configuration options. It also provides a method without arguments that uses the default CII configuration. Listing 4‑2 shows an example properties configuration equivalent to the default one.

Listing 4‑2 Example C++ default configuration

log4cplus.appender.ConsoleAppender=log4cplus::ConsoleAppender

log4cplus.appender.ConsoleAppender.layout= elt::log::layout::CiiSimpleLayout

log4cplus.appender.LogsinkFileAppender=log4cplus::RollingFileAppender

log4cplus.appender.LogsinkFileAppender.File=

${CIISRV_ROOT}/logsink/eeltdev-output-1584706371399.log

log4cplus.appender.LogsinkFileAppender.layout=elt::log::layout::CiiLayout

log4cplus.rootLogger=ERROR, ConsoleAppender, LogsinkFileAppender

Detailed CiiLogManager API can be found in Appendix A.

9.4.3.3. Python¶

Three configuration modes are supported:

Default CII Log configuration

Custom dictionary-based configuration

Custom file configuration

If a file configuration is used, the file should contain the equivalent of a dictionary-based configuration.

Listing 4‑3 shows an example of a configuration equivalent to the default configuration.

Listing 4‑3 Example Python default configuration

'version': 1,

'cii_log_buffer': {

'enabled': False,

'ttl_ms': 60000

},

'handlers': {

'cii-logsink': {

'class': 'logging.handlers.RotatingFileHandler',

'filename': '${CIISRV_ROOT}/logsink/eeltdev-output-1584706371399.log',

'maxBytes': 10 * 1024 * 1024,

'backupCount': 5,

'formatter': 'cii'

},

'cii-console': {

'class': 'logging.StreamHandler',

'formatter': 'cii-brief',

'stream': 'ext://sys.stdout',

'level': 'ERROR'

}

},

'root': {

'level': 'ERROR',

'handlers': ['cii-logsink', 'cii-console']

}

Detailed API can be found in Appendix A.

9.4.4. Log Layouts¶

Two CII Log Layouts are provided:

CiiLayout

CiiSimpleLayout

These layouts are described in section 2.3.

Examples on how to use the CII Log Layouts can be found in the configurations shown in section 4.3.

9.4.4.1. Java¶

The CII Log Layouts are provided as Log4j plugins, implemented by the classes CiiLayout and CiiSimpleLayout. They can be used as any other Log4j layout plugin. Usage example can be found in Listing 4‑1.

9.4.4.2. C++¶

The CII Log Layouts are provided as Log4cplus plugins, implemented by the classes CiiLayout and CiiSimpleLayout. They can be used as any other Log4cplus layout. A usage example can be found in Listing 4‑2.

9.4.4.3. Python¶

To use the CII Log Layouts, the cii and cii-brief formatters can be used to enable the CiiLayout and the CiiSimpleLayout respectively. The definition of those formatters is injected in the configuration by the CiiLogManager configuration methods. A usage example can be found in Listing 4‑3.

9.4.5. Basic usage example with default configuration¶

The following examples show a basic usage workflow of the CII Logging API to produce log messages of different levels using a default CII configuration, as described in 4.3.

9.4.5.1. Java¶

The example shown in Listing 4‑4 can be run from the root directory of the logging-examples module with the provided run-logging-example-default-config script.

Listing 4‑4 Java basic example

public class LoggingBasicDefaultExample {

public static void main(String[] args) {

/* Automatically initialize the logging framework and get the root

* logger.

* No configuration is provided so a default configuration is used.

* This default configuration has two appenders: one that outputs to

* stdout using CiiSimpleLayout, and another one that outputs to a

* rolling file in $CIISRV_ROOT/logsink directory

* Only log events of levels ERROR and FATAL will be output to the

* appenders.

*/

Logger rootlogger = CiiLogManager.getLogger("");

/* Send INFO level message to root logger

* This log will be discarded since the minimum log level for the root

* logger is ERROR

*/

rootlogger.log(Level.INFO, "First root logger message");

/* Send ERROR level message to root logger

* This log will be passed to the appenders for processing, since the

* minimum log level for the root logger is ERROR

*/

rootlogger.log(Level.ERROR, "Second root logger message");

/* Get a logger with name 'elt.log.test'. There's no logger configured

* with that name, therefore it will be created inheriting the

* configuration of its nearest ancestor in the loggers named

* hierarchy. In this case, since there is only root logger

* configured, the configuration of the root logger will be used.

*/

Logger logger = CiiLogManager.getLogger("elt.log.test");

/* Send INFO level message to elt.log.test logger

* This log will be discarded since the minimum log level is ERROR

*/

logger.log(Level.INFO, "First elt.log.test logger message");

/* Send ERROR level message to elt.log.test logger

* This log will be passed to the appenders for processing, since the

* minimum log level is ERROR

*/

logger.log(Level.ERROR, "Second elt.log.test logger message");

}

}

The log output produced by this example would be the following on stdout:

2020-03-19T20:05:03.104+0100, ERROR, elt.LoggingBasicDefaultExample/main, Second root logger message

2020-03-19T20:05:12.571+0100, ERROR, elt.LoggingBasicDefaultExample/main, Second elt.log.test logger message

And in the log file in the logsink directory:

Cref=LoggingBasicDefaultExample.java-31, Date=2020-03-19T20:05:03.104+0100, HostName=docker-eso-eelt, LogType=ERROR, SourceID=elt.LoggingBasicDefaultExample/main, LogID=elt.log.CiiLogManager.main-31, Message=Second root logger message

Cref=LoggingBasicDefaultExample.java-49, Date=2020-03-19T20:05:12.571+0100, HostName=docker-eso-eelt, LogType=ERROR, SourceID=elt.LoggingBasicDefaultExample/main, LogID=elt.log.test.main-49, Message=Second elt.log.test logger message
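The logsink lines above are sequences of Key=Value pairs separated by a comma and a space. In the CII Log system the extraction of these fields is done by Logstash; the following Python sketch only illustrates the structure of such a line. The function name `parse_cii_line` is hypothetical, and the splitting rule is inferred from the sample output above rather than taken from the format specification in Appendix B.

```python
import re

# A field starts at the beginning of the line or after ", " and consists of a
# capitalized alphabetic key followed by "=". This rule is an assumption
# derived from the sample log lines.
_FIELD_START = re.compile(r"(?:^|, )([A-Z][A-Za-z]*)=")


def parse_cii_line(line):
    """Split one CiiLayout-style line into a dict of field name to value.

    Hypothetical helper for illustration. A message that itself contains
    text of the form ', Key=' would be split incorrectly.
    """
    line = line.rstrip("\n")
    matches = list(_FIELD_START.finditer(line))
    fields = {}
    for index, match in enumerate(matches):
        # The value runs up to the start of the next field, or to end of line.
        if index + 1 < len(matches):
            end = matches[index + 1].start()
        else:
            end = len(line)
        fields[match.group(1)] = line[match.end():end]
    return fields


sample = (
    "Cref=LoggingBasicDefaultExample.java-31, "
    "Date=2020-03-19T20:05:03.104+0100, HostName=docker-eso-eelt, "
    "LogType=ERROR, SourceID=elt.LoggingBasicDefaultExample/main, "
    "LogID=elt.log.CiiLogManager.main-31, Message=Second root logger message"
)
parsed = parse_cii_line(sample)
```

For the first sample line this yields the fields Cref, Date, HostName, LogType, SourceID, LogID, and Message, in the order in which they appear in the line.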

9.4.5.2. C++¶

The example shown in Listing 4‑5 can be run with the $CIISRV_ROOT/bin/logging-example-app executable file installed by the logging-examples module.

Listing 4‑5 C++ basic example

int main(int ac, char *av[]) {

try {

/* Automatically initialize the logging framework and get the root logger.

* No configuration is provided so a default configuration is used. This

* default configuration has two appenders: one that outputs to stdout

* using CiiSimpleLayout, and another one that outputs to a rolling

* file in $CIISRV_ROOT/logsink directory

* Only log events of levels ERROR and FATAL will be output to the

* appenders.

*/

log4cplus::Logger root_logger = ::elt::log::CiiLogManager::GetLogger();

std::vector<log4cplus::SharedAppenderPtr> appenders = root_logger.getAllAppenders();

for (const auto& appender: appenders) {

std::cout << "Appender:" << appender->getName() << '\n';

}

/* Send INFO level message to root logger

* This log will be discarded since the minimum log level for the root

* logger is ERROR

*/

root_logger.log(log4cplus::INFO_LOG_LEVEL, "First root logger message");

/* Send ERROR level message to root logger

* This log will be passed to the appenders for processing, since the

* minimum log level for the root logger is ERROR

*/

root_logger.log(log4cplus::ERROR_LOG_LEVEL, "Second root logger message");

/* Get a logger with name 'elt.log.test'. There's no logger configured

* with that name, therefore it will be created inheriting the

* configuration of its nearest ancestor in the loggers named hierarchy.

* In this case, since there is only root logger configured, the

* configuration of the root logger will be used.

*/

log4cplus::Logger logger = ::elt::log::CiiLogManager::GetLogger("elt.log.test");

/* Send INFO level message to elt.log.test logger

* This log will be discarded since the minimum log level is ERROR

*/

logger.log(log4cplus::INFO_LOG_LEVEL,

"First elt.log.test logger message");

/* Send ERROR level message to elt.log.test logger

* This log will be passed to the appenders for processing, since the

* minimum log level is ERROR

*/

logger.log(log4cplus::ERROR_LOG_LEVEL,

"Second elt.log.test logger message");

return 0;

} catch (const ::elt::error::CiiException& ex) {

std::cerr << "CiiException occurred while executing sample code. What: "

<< ex.what() << '\n';

}

return -1;

}

This would produce the following output on stdout:

2020-04-19T21:49:41.894+0200, ERROR, root/140400261358272, Second root logger message

2020-04-19T21:49:41.894+0200, ERROR, root/140400261358272, Second elt.log.test logger message

And this one on the log file in logsink directory:

Cref=../cpp/logging-app/src/logging-app.cpp-35, Date=2020-04-19T21:49:41.894+0200, HostName=docker-eso-eelt, LogType=ERROR, SourceID=140400261358272, LogID=root.main-35, Message=Second root logger message

Cref=../cpp/logging-app/src/logging-app.cpp-53, Date=2020-04-19T21:49:41.894+0200, HostName=docker-eso-eelt, LogType=ERROR, SourceID=140400261358272, LogID=root.main-53, Message=Second elt.log.test logger message

9.4.5.3. Python¶

The example shown in Listing 4‑6 can be run with the $CIISRV_ROOT/bin/cii-logging-example-app-py executable file installed by the logging-examples module.

Listing 4‑6 Python basic example

def main():

"""

Main application code

@return status code, int

"""

result = 0

try:

# Automatically initialize the logging framework and get the root

# logger.

# No configuration is provided so a default configuration is used.

# This default configuration has two handlers: one that outputs to

# stdout using elt.log.CiiLogConfigurator.CII_SIMPLE_LOG_PATTERN and

# another one that outputs to a rolling file in $CIISRV_ROOT/logsink

# directory.

# Only log events of levels ERROR and FATAL will be output to the

# handlers.

root_logger = CiiLogManager.get_logger()

# Send INFO level message to root logger

# This log will be discarded since the minimum log level for the root

# logger is ERROR

root_logger.info("First root logger message")

# Send ERROR level message to root logger

# This log will be passed to the handlers for processing, since the

# minimum log level for the root logger is ERROR

root_logger.error("Second root logger message")

# Get a logger with name 'elt.log.test'. There's no logger configured

# with that name, therefore it will be created inheriting the

# configuration of its nearest ancestor in the logger hierarchy. In

# this case, since there is only root logger configured,

# the configuration of the root logger will be used

logger = CiiLogManager.get_logger("elt.log.test")

# Send INFO level message to elt.log.test logger.

# This log will be discarded since the minimum log level is ERROR

logger.info("First elt.log.test logger message")

# Send ERROR level message to elt.log.test logger

# This log will be passed to the handlers for processing, since the

# minimum log level is ERROR

logger.error("Second elt.log.test logger message")

except Exception as e:

print('Exception occurred while executing sample code: %s' % e)

result = 5

return result

if __name__ == '__main__':

sys.exit(main())

This would produce the following output on stdout:

2020-04-19T21:49:41.894+0200, ERROR, cii-logging-example-app-py/MainThread, Second root logger message

2020-04-19T21:49:41.894+0200, ERROR, cii-logging-example-app-py/MainThread, Second elt.log.test logger message

And this one on the log file in logsink directory:

Cref= cii-logging-example-app-py.main-35, Date=2020-04-19T21:49:41.894+0200, HostName=docker-eso-eelt, LogType=ERROR, SourceID= cii-logging-example-app-py/MainThread, LogID=main-35, Message=Second root logger message

Cref= cii-logging-example-app-py.main-50, Date=2020-04-19T21:49:41.894+0200, HostName=docker-eso-eelt, LogType=ERROR, SourceID= cii-logging-example-app-py/MainThread, LogID=main-50, Message=Second elt.log.test logger message
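The level inheritance described in the comments of the example above can be reproduced with the Python standard logging module alone. The following is a minimal sketch and not the CII default configuration: the handler, its format string, and the in-memory stream are assumptions made for illustration, and CiiLogManager is deliberately not used.

```python
import io
import logging

# Stand-in for a default configuration: one handler on the root logger and a
# root level of ERROR. An in-memory stream replaces stdout (assumption) so
# that the result can be inspected.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s, %(name)s, %(message)s"))

root_logger = logging.getLogger()
root_logger.addHandler(handler)
root_logger.setLevel(logging.ERROR)

# 'elt.log.test' has no level of its own, so its effective level is taken
# from the nearest configured ancestor, which here is the root logger.
logger = logging.getLogger("elt.log.test")

logger.info("First elt.log.test logger message")    # below ERROR: discarded
logger.error("Second elt.log.test logger message")  # reaches the root handler

effective_level = logger.getEffectiveLevel()
emitted = stream.getvalue().splitlines()
print(logging.getLevelName(effective_level))
print(emitted)
```

Only the ERROR message reaches the handler, which matches the behaviour described for the default configuration.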

9.4.6. Basic usage example with custom configuration¶

This section shows how to use a custom configuration. In this case, the configuration defines two loggers, each with a different appender, as described in Table 4‑1.

Table 4‑1 Example loggers and appenders

| Logger name | Logger level | Appender type |
|---|---|---|
| “” (root) | INFO | File |
| “elt.log.test” | TRACE | Console |

This configuration can be useful if, for example, we want to send all logs of severity INFO or higher to the logsink directory, but we are trying to troubleshoot a bug in package elt.log.test and therefore also want logs of severity as low as TRACE to be output for that specific package.

In certain cases, it is necessary or useful for a developer to change the existing logging configuration at runtime. In the C++ and Python cases, the respective log4cplus and Python logging APIs are unaffected by the CII Log extensions of those frameworks, and runtime configuration is described in the API documentation of each framework [4][5][6]. In the case of Java, due to the implementation details of the postmortem buffer, it is not possible to change the levels or filters of loggers or appenders while the postmortem buffer is enabled. If the postmortem buffer is not enabled, the Log4j API is unaffected and the configuration can be modified according to the Log4j API documentation [4]. More details on the limitations on the usage of the Log4j API are described in section 4.9.1.
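As a minimal sketch of a runtime change in Python, the standard logging API allows the level of a logger to be changed while the application is running. The logger name runtime.config.example and the in-memory stream are hypothetical and only serve the illustration; no CII configuration is involved.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s, %(message)s"))

# Hypothetical logger name, used only for this illustration.
logger = logging.getLogger("runtime.config.example")
logger.addHandler(handler)
logger.propagate = False  # keep the example isolated from root handlers
logger.setLevel(logging.WARNING)

logger.debug("Discarded: below the initial WARNING threshold")

# Lower the threshold at runtime, for example while troubleshooting.
logger.setLevel(logging.DEBUG)
logger.debug("Emitted: threshold lowered to DEBUG at runtime")

# Restore the original threshold once troubleshooting is done.
logger.setLevel(logging.WARNING)
logger.debug("Discarded again")

lines = stream.getvalue().splitlines()
print(lines)
```

Only the message logged while the threshold was lowered is emitted.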

The example code and configurations for each language, and the outputs produced by the examples from section 4.4 with these configurations, are shown below. These examples can also be found in the logging-examples module.

9.4.6.1. Java¶

The example shown in Listing 4‑7 uses the configuration shown in Listing 4‑8.

It can be run from the root directory of the logging-examples module with the provided run-logging-example-custom-config script.

Listing 4‑7 Java example app with custom configuration

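package elt.log.examples;

import org.apache.logging.log4j.Level;

import org.apache.logging.log4j.Logger;

import org.apache.logging.log4j.core.config.Configurator;

import elt.log.CiiLogManager;

public class LoggingBasicExampleCustomConfig {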

public static void main(String[] args) {

/* Logging is initialized with custom configuration

* custom-example-log4j2-config.xml located in resources directory.

* This custom configuration has two loggers:

* - root: With an INFO level and a rolling file appender to

* $CIISRV_ROOT/logsink directory

* - "elt.log.test": With a TRACE level, a console appender, and

* additivity enabled

*

* It is also possible to provide the path of the configuration file

* as system property log4j.configurationFile. This can be achieved

* passing it as an argument when running the application:

* $ java -Dlog4j.configurationFile=<path/to/config/file>

* Or setting it programmatically before Log4j is initialized:

* System.setProperty(

* "log4j.configurationFile", "path/to/config/file");

*/

Configurator.initialize("custom-config",

"java/src/resources/custom-example-log4j2-config.xml");

/* Get the root logger.

*/

Logger rootlogger = CiiLogManager.getLogger("");

/* Send INFO level message to root logger

* This log will be output to the log file in logsink directory

*/

rootlogger.log(Level.INFO, "First root logger message");

/* Send TRACE level message to root logger. This log message will be

* discarded due to the root logger having a threshold level of INFO

*/

rootlogger.log(Level.TRACE, "Second root logger message");

/* Get a logger with name 'elt.log.test'

*/

Logger logger = CiiLogManager.getLogger("elt.log.test");

/* Send TRACE level message to elt.log.test logger

* This log will be output to console since the minimum log level is

* TRACE.

* It will be also passed to its parent logger (root) since additivity

* property of this logger is set to true. The root logger will accept

* it also, despite its level being set to INFO. This is due to the

* fact that the logger level threshold is only checked at the logger

* where the message is created. If a threshold level were set on the

* appender attached to the root logger, that one would be applied.

*/

logger.log(Level.TRACE, "First elt.log.test logger message");

/* Send ERROR level message to elt.log.test logger

* This log will be output to console since the minimum log level is

* TRACE. It will be also passed to its parent logger (root) since

* additivity property of this logger is set to true. The root logger

* will also pass it to the logsink file appender.

*/

logger.log(Level.ERROR, "Second elt.log.test logger message");

}

}

Listing 4‑8 Log4j custom configuration

<Configuration status="WARN">

<Appenders>

<Console name="Console" target="SYSTEM_OUT">

<CiiSimpleLayout/>

</Console>

<RollingFile name="FileLogsink" append="true"

createOnDemand="true"

fileName="${env:CIISRV_ROOT}/logsink/custom-example.log"

filePattern="${env:CIISRV_ROOT}/logsink/custom-example.%i.log">

<Policies>

<SizeBasedTriggeringPolicy/>

</Policies>

<CiiLayout/>

</RollingFile>

</Appenders>

<Loggers>

<Logger name="elt.log.test" level="TRACE" additivity="true">

<AppenderRef ref="Console"/>

</Logger>

<Root level="INFO">

<AppenderRef ref="FileLogsink"/>

</Root>

</Loggers>

</Configuration>

This configuration would produce the following output on console:

2020-04-19T23:32:38.600+0200, TRACE, elt.log.examples.LoggingBasicExampleCustomConfig/main, First elt.log.test logger message

2020-04-19T23:32:38.600+0200, ERROR, elt.log.examples.LoggingBasicExampleCustomConfig/main, Second elt.log.test logger message

And the following output in the custom-example.log file in the logsink directory:

Cref=LoggingBasicExampleCustomConfig.java-38, Date=2020-04-19T23:32:38.597+0200, HostName=docker-eso-eelt, LogType=INFO, SourceID=elt.log.examples.LoggingBasicExampleCustomConfig/main, LogID=.main-38, Message=First root logger message

Cref=LoggingBasicExampleCustomConfig.java-58, Date=2020-04-19T23:32:38.600+0200, HostName=docker-eso-eelt, LogType=TRACE, SourceID=elt.log.examples.LoggingBasicExampleCustomConfig/main, LogID=elt.log.test.main-58, Message=First elt.log.test logger message

Cref=LoggingBasicExampleCustomConfig.java-66, Date=2020-04-19T23:32:38.600+0200, HostName=docker-eso-eelt, LogType=ERROR, SourceID=elt.log.examples.LoggingBasicExampleCustomConfig/main, LogID=elt.log.test.main-66, Message=Second elt.log.test logger message

9.4.6.2. C++¶

The example shown in Listing 4‑9 can be run with the $CIISRV_ROOT/bin/logging-example-customconfig-app executable file installed by the logging-examples module.

Listing 4‑9 C++ example app with custom configuration

int main(int ac, char *av[]) {

try {

/*

* Logging is initialized with custom configuration.

*

* This custom configuration has two loggers:

* - root: With an INFO level and a rolling file appender to

* $CIISRV_ROOT/logsink directory

* - "elt.log.test": With a TRACE level, a console appender, and

* additivity enabled

*/

::elt::log::CiiLogManager::Configure("log4cplus-test.prop");

/* Get the root logger.*/

log4cplus::Logger root_logger = ::elt::log::CiiLogManager::GetLogger();

/* Send INFO level message to root logger

* This log will be output to the log file in logsink directory

*/

root_logger.log(log4cplus::INFO_LOG_LEVEL, "First root logger message");

/* Send TRACE level message to root logger. This log message will be

* discarded due to the root logger having a threshold level of INFO

*/

root_logger.log(log4cplus::TRACE_LOG_LEVEL, "Second root logger message");

/* Get a logger with name 'elt.log.test' */

log4cplus::Logger logger = ::elt::log::CiiLogManager::GetLogger("elt.log.test");

/*

* Send TRACE level message to elt.log.test logger

* This log will be output to console since the minimum log level is

* TRACE.

* It will be also passed to its parent logger (root) since additivity

* property of this logger is set to true. The root logger will accept it

* also, despite its level being set to INFO. This is due to the fact

* that the logger level threshold is only checked at the logger where the

* message is created.

* If a threshold level were set on the appender attached to the

* root logger, that one would be applied.

*/

logger.log(log4cplus::TRACE_LOG_LEVEL,

"First elt.log.test logger message");

/*

* Send ERROR level message to elt.log.test logger

* This log will be output to console since the minimum log level is

* TRACE.

* It will be also passed to its parent logger (root) since additivity

* property of this logger is set to true. The root logger will also pass

* it to the logsink file appender.

*/

logger.log(log4cplus::ERROR_LOG_LEVEL,

"Second elt.log.test logger message");

return 0;

} catch (const ::elt::error::CiiException& ex) {

std::cerr << "CiiException occurred while executing sample code. What: "

<< ex.what() << '\n';

}

return -1;

}

Listing 4‑10 Log4cplus custom configuration

log4cplus.rootLogger=INFO, ROLLINGFILE

log4cplus.logger.elt.log.test=TRACE, STDOUT

log4cplus.additivity.elt.log.test=TRUE

log4cplus.appender.STDOUT=log4cplus::ConsoleAppender

log4cplus.appender.STDOUT.layout=elt::log::layout::CiiSimpleLayout

log4cplus.appender.ROLLINGFILE=log4cplus::RollingFileAppender

log4cplus.appender.ROLLINGFILE.File=/home/rfernandez/INTROOT/logsink/custom-example.log

log4cplus.appender.ROLLINGFILE.MaxFileSize=16MB

log4cplus.appender.ROLLINGFILE.MaxBackupIndex=1

log4cplus.appender.ROLLINGFILE.layout=elt::log::layout::CiiLayout

This configuration would produce the following output on console:

2020-04-19T23:32:38.600+0200, TRACE, elt.log.test/139781441696448, First elt.log.test logger message

2020-04-19T23:32:38.600+0200, ERROR, elt.log.test/139781441696448, Second elt.log.test logger message

And the following output in the custom-example.log file in the logsink directory:

Cref=../cpp/logging-customconfig-app/src/logging-customconfig-app.cpp-105, Date=2020-04-19T23:32:38.597+0200, HostName=docker-eso-eelt, LogType=INFO, SourceID=139781441696448, LogID=root.main-105, Message=First root logger message

Cref=../cpp/logging-customconfig-app/src/logging-customconfig-app.cpp-125, Date=2020-04-19T23:32:38.600+0200, HostName=docker-eso-eelt, LogType=TRACE, SourceID=139781441696448, LogID=elt.log.test.main-125, Message=First elt.log.test logger message

Cref=../cpp/logging-customconfig-app/src/logging-customconfig-app.cpp-134, Date=2020-04-19T23:32:38.600+0200, HostName=docker-eso-eelt, LogType=ERROR, SourceID=139781441696448, LogID=elt.log.test.main-134, Message=Second elt.log.test logger message

9.4.6.3. Python¶

The example shown in Listing 4‑11 can be run with the $CIISRV_ROOT/bin/cii-logging-example-customconfig-app-py executable file installed by the logging-examples module.

Listing 4‑11 Python example app with custom configuration

def main():

"""

Main application code

@return status code, int

"""

result = 0

try:

# Logging is initialized with custom configuration

#

# This custom configuration has two loggers:

# - root: With an INFO level and rolling file handler to

# $CIISRV_ROOT/logsink directory

# - "elt.log.test": With a TRACE level, a console handler and

# propagation enabled

CiiLogManager.configure(CONFIG_FILE_NAME)

# Get the root logger

root_logger = CiiLogManager.get_logger()

# Send INFO level message to root logger

# This log will be forwarded to the log file in logsink directory

root_logger.info("First root logger message")

# Send TRACE level message to root logger.

# This log message will be discarded due to the

# root logger having a threshold level of INFO

root_logger.trace("Second root logger message")

# Get a logger with name 'elt.log.test'

logger = CiiLogManager.get_logger("elt.log.test")

# Send a TRACE level message to elt.log.test logger

# This log will be forwarded to console since the minimum log level is

# TRACE.

# It will be also passed to its parent logger (root) since propagate

# property of this logger is set to True (by default). The root logger

# will also pass it to the logsink file handler. This is due to the

# fact that the logger level threshold is only checked at the logger

# where the message is created.

logger.trace("First elt.log.test logger message")

# Send ERROR level message to elt.log.test logger

# This log will be displayed on console since the minimum log level is

# TRACE.

# It will be also passed to its parent logger (root) since propagate

# property of this logger is set to True (by default). The root logger

# will also pass it to the logsink file handler.

logger.error("Second elt.log.test logger message")

except Exception as e:

print('Exception occurred while executing sample code: %s' % e)

result = 5

return result

if __name__ == '__main__':

sys.exit(main())

Listing 4‑12 Python logging custom configuration

{

"version": 1,

"handlers": {

"logsink": {

"class": "logging.handlers.RotatingFileHandler",

"backupCount": 5,

"filename": "CII_LOGSINK_FILENAME",

"formatter": "cii",

"maxBytes": 10485760

},

"console": {

"class": "logging.StreamHandler",

"formatter": "cii-brief",

"stream": "ext://sys.stdout"

}

},

"root": {

"handlers": [

"logsink"

],

"level": "INFO"

},

"loggers": {

"elt.log.test": {

"handlers": [

"console"

],

"level": "TRACE"

}

}

}

This configuration would produce the following output on console:

2020-04-19T23:32:38.600+0200, TRACE, cii-logging-example-customconfig-app-py/MainThread, First elt.log.test logger message

2020-04-19T23:32:38.600+0200, ERROR, cii-logging-example-customconfig-app-py/MainThread, Second elt.log.test logger message

And the following output on the eelt-dev-output.log file in the logsink directory:

Cref= cii-logging-example-customconfig-app-py.main-92, Date=2020-04-19T23:32:38.597+0200, HostName=docker-eso-eelt, LogType=INFO, SourceID= cii-logging-example-customconfig-app-py/MainThread, LogID=main-92, Message=First root logger message

Cref= cii-logging-example-customconfig-app-py.main-108, Date=2020-04-19T23:32:38.600+0200, HostName=docker-eso-eelt, LogType=TRACE, SourceID= cii-logging-example-customconfig-app-py/MainThread, LogID=main-108, Message=First elt.log.test logger message

Cref= cii-logging-example-customconfig-app-py.main-115, Date=2020-04-19T23:32:38.600+0200, HostName=docker-eso-eelt, LogType=ERROR, SourceID= cii-logging-example-customconfig-app-py/MainThread, LogID=main-115, Message=Second elt.log.test logger message
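The comments in the examples above state that the logger level threshold is only checked at the logger where the message is created, and that a threshold set on a handler (appender) is applied separately. The following minimal sketch shows both effects with the Python standard logging module only. The logger names sketch and sketch.test, the in-memory streams, and the use of DEBUG in place of the CII TRACE level are assumptions made for illustration.

```python
import io
import logging

formatter = logging.Formatter("%(levelname)s, %(name)s, %(message)s")


def make_handler(stream):
    """Create a stream handler without a threshold of its own."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(formatter)
    return handler


file_like = io.StringIO()     # stands in for the logsink file
console_like = io.StringIO()  # stands in for the console

# Hypothetical parent/child pair mirroring root and "elt.log.test".
parent = logging.getLogger("sketch")
parent.setLevel(logging.INFO)
parent.propagate = False      # keep the example isolated from the real root
parent_handler = make_handler(file_like)
parent.addHandler(parent_handler)

child = logging.getLogger("sketch.test")
child.setLevel(logging.DEBUG)  # DEBUG stands in for TRACE
child.addHandler(make_handler(console_like))

# Accepted by the child and propagated to the parent's handler, even though
# the parent logger level is INFO: the parent's level is not consulted.
child.debug("first message")

# With a threshold on the parent's handler, propagated records are filtered
# at that handler.
parent_handler.setLevel(logging.INFO)
child.debug("second message")
child.error("third message")

console_lines = console_like.getvalue().splitlines()
file_lines = file_like.getvalue().splitlines()
print(console_lines)
print(file_lines)
```

The console stream receives all three messages, while the file stream receives the first and third only.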

9.4.7. Adding optional information to log messages¶

Some of the fields in a CII log message are optional and have to be explicitly added to a log event by the user. As explained in section 4.2, the CII Log Client API, through the CiiLogMessageBuilder, provides a mechanism to create log messages with this additional information using a builder pattern.

This optional information can be added to a log message:

Audience: The intended audience of the log message

UserLogID: A log ID provided by the user.

Formatted CiiException: A CII Exception [3] provided by the user that will be formatted into the fields:

ErrorMessage

ErrorTypeName

ErrorDateTime

ErrorTrace

Tracing info: The builder is responsible for extracting the following data from an existing active Tracing context:

TraceID

SpanID

Once the optional parameters are defined, the user can call the build() method on the builder to produce the formatted string with the selected optional fields.

The builder also provides convenience factory methods for the most common logging use cases: setting a log’s audience and logging a formatted CII Error.

Support for tracing information is currently not available for C++ and Python. It will be added in future releases.

The complete API can be found in Appendix A.
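To illustrate the builder pattern and the convenience factory methods described above, the following is a minimal sketch of a message builder. It is not the CiiLogMessageBuilder implementation: the class names SketchMessageBuilder and SketchAudience are hypothetical, only the audience and user log ID fields are covered, and the "Field=value" rendering is an assumption modelled on the example outputs in this section.

```python
from enum import Enum
from typing import List, Optional


class SketchAudience(Enum):
    """Hypothetical stand-in for CiiLogAudience (only one value shown)."""
    DEVELOPER = "DEVELOPER"


class SketchMessageBuilder:
    """Illustrative builder, not the CiiLogMessageBuilder implementation."""

    def __init__(self, message: Optional[str] = None) -> None:
        self._message = message
        self._audience: Optional[SketchAudience] = None
        self._user_log_id: Optional[str] = None

    def with_message(self, message: str) -> "SketchMessageBuilder":
        self._message = message
        return self  # returning self is what allows the calls to be chained

    def with_audience(self, audience: SketchAudience) -> "SketchMessageBuilder":
        self._audience = audience
        return self

    def with_user_log_id(self, user_log_id: str) -> "SketchMessageBuilder":
        self._user_log_id = user_log_id
        return self

    def build(self) -> str:
        # Only the optional fields that were explicitly set are appended.
        parts: List[str] = [self._message or ""]
        if self._audience is not None:
            parts.append("Audience=" + self._audience.value)
        if self._user_log_id is not None:
            parts.append("UserLogID=" + self._user_log_id)
        return ", ".join(parts)

    @classmethod
    def create_and_build_with_audience(
            cls, message: str, audience: SketchAudience) -> str:
        # Convenience factory: a single call instead of create/with/build.
        return cls(message).with_audience(audience).build()


built = (SketchMessageBuilder()
         .with_message("Message value")
         .with_user_log_id("user_log_id")
         .with_audience(SketchAudience.DEVELOPER)
         .build())
shortcut = SketchMessageBuilder.create_and_build_with_audience(
    "Message for DEVELOPER audience", SketchAudience.DEVELOPER)
print(built)
print(shortcut)
```

The resulting strings would then be passed to a logger call, as the language-specific examples in this section do with the real builder.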

Sections 4.7.1, 4.7.2, and 4.7.3 show usage examples for the CiiLogMessageBuilder in Java, C++, and Python. All examples follow the same workflow.

First, the language-specific logging frameworks are initialized with the default CII configuration and a logger named myLogger is retrieved.

(How to enable tracing to be added in following versions)

Then, the following logs with optional information are produced:

A log with a message, a custom user ID, and an audience value.

A log with a message and a formatted CII exception using the builder pattern.

A log with a message and a formatted CII exception using the builder’s convenience method.

A log with a message and an audience value using the builder’s convenience method.

A log without a message and with a formatted CiiException. Since no message is passed, the CiiException error message will be used as the log message field.

A log with current tracing context information. Note that these examples will not output any tracing information, since tracing is not enabled. These examples are meant to illustrate the usage of the CiiLogMessageBuilder.
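The exception formatting used in this workflow, including the fallback to the exception's error message when no log message is given, can be sketched as follows. This is an illustration only: the helper format_exception_fields is hypothetical, a built-in ValueError is used instead of a CiiException, and ErrorDateTime is taken at formatting time, which is an assumption and may differ from how the CII builder obtains it.

```python
import time
import traceback
from typing import Optional


def format_exception_fields(message: Optional[str], exc: BaseException) -> str:
    """Hypothetical helper: render an exception into the four Error* fields."""
    error_message = str(exc)
    trace = "".join(
        traceback.format_exception(type(exc), exc, exc.__traceback__)).rstrip()
    fields = [
        # Fall back to the exception text when no log message is given.
        message if message else error_message,
        "ErrorTypeName=%s.%s" % (type(exc).__module__, type(exc).__qualname__),
        # Assumption: timestamp in milliseconds, taken at formatting time.
        "ErrorDateTime=%d" % int(time.time() * 1000),
        "ErrorMessage=" + error_message,
        "ErrorTrace=" + trace,
    ]
    return ", ".join(fields)


try:
    raise ValueError("TEST EXCEPTION")
except ValueError as error:
    with_message = format_exception_fields("This is an exception", error)
    without_message = format_exception_fields(None, error)

print(with_message.splitlines()[0])
print(without_message.splitlines()[0])
```

In the second call no message is passed, so the rendered line starts with the exception text itself.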

9.4.7.1. Java¶

The example shown in Listing 4‑13 can be run from the root directory of the logging-examples module with the provided run-logging-example-message-builder-java script.

Listing 4‑13 Adding optional info to logs (Java)

package elt.log.examples;

import elt.error.CiiException;

import elt.error.CiiInvalidTypeException;

import elt.log.CiiLogAudience;

import elt.log.CiiLogMessageBuilder;

import org.apache.logging.log4j.Logger;

import elt.log.CiiLogManager;

public class LoggingMessageBuilderExample {

public static void main(String[] args) {

//initialize Log4j with default CII configuration and retrieve

//"myLogger" Logger

Logger logger = CiiLogManager.getLogger("myLogger");

//Build and log message with message value, user ID and DEVELOPER

//audience

logger.error(CiiLogMessageBuilder.create("Message value")

.withUserLogId("user_log_id")

.withAudience(CiiLogAudience.DEVELOPER)

.build());

CiiException e =

new CiiInvalidTypeException("Exception error message");

//Build and log message with formatted CiiException using builder

//pattern

logger.error(CiiLogMessageBuilder.create()

.withMessage("This is and exception")

.withCiiException(e)

.build());

//Build and log message with formatted CiiException using the

//convenience method

logger.error(CiiLogMessageBuilder.createAndBuildWithException(

"This is an exception", e));

//Build and log message with DEVELOPER audience

logger.error(CiiLogMessageBuilder.createAndBuildWithAudience(

"Message for DEVELOPER audience", CiiLogAudience.DEVELOPER));

//Build and log message with formatted CiiException using the

//convenience method without passing a message field value

logger.error(

CiiLogMessageBuilder.createAndBuildWithException(null, e));

//Build and log a message with tracing context information

logger.error(CiiLogMessageBuilder.create(

"This is a log message with tracing info")

.withTracingInfo()

.build());

}

}

The log output from this code, using the CiiSimpleLayout, would be the following:

Listing 4‑14 Output CiiLogMessageBuilder usage example Java

2020-03-30T10:05:46.377+0200, ERROR, elt.log.examples.LoggingMessageBuilderExample/main, Message value, Audience=DEVELOPER, UserLogID=user_log_id

2020-03-30T10:05:46.401+0200, ERROR, elt.log.examples.LoggingMessageBuilderExample/main, This is and exception, ErrorTypeName=elt.error.CiiInvalidTypeException, ErrorDateTime=1585523146401, ErrorMessage=Invalid type detected Exception error message, ErrorTrace=elt.error.CiiInvalidTypeException

at elt.log.examples.LoggingMessageBuilderExample.main(LoggingMessageBuilderExample.java:23)

2020-03-30T10:05:46.402+0200, ERROR, elt.log.examples.LoggingMessageBuilderExample/main, This is an exception, ErrorTypeName=elt.error.CiiInvalidTypeException, ErrorDateTime=1585523146401, ErrorMessage=Invalid type detected Exception error message, ErrorTrace=elt.error.CiiInvalidTypeException

at elt.log.examples.LoggingMessageBuilderExample.main(LoggingMessageBuilderExample.java:23)

2020-03-30T10:05:46.402+0200, ERROR, elt.log.examples.LoggingMessageBuilderExample/main, Message for DEVELOPER audience, Audience=DEVELOPER

2020-03-30T10:05:46.402+0200, ERROR, elt.log.examples.LoggingMessageBuilderExample/main, Invalid type detected Exception error message, ErrorTypeName=elt.error.CiiInvalidTypeException, ErrorDateTime=1585523146401, ErrorMessage=Invalid type detected Exception error message, ErrorTrace=elt.error.CiiInvalidTypeException

at elt.log.examples.LoggingMessageBuilderExample.main(LoggingMessageBuilderExample.java:23)

2020-03-30T10:05:46.403+0200, ERROR, elt.log.examples.LoggingMessageBuilderExample/main, This is a log message with tracing info

9.4.7.2. C++¶

This example can be run with the $CIISRV_ROOT/bin/logging-example-messagebuilder executable file installed by the logging-examples module.

Listing 4‑15 Adding optional info to logs (C++)

int main(int ac, char *av[]) {

try {

log4cplus::Logger logger = ::elt::log::CiiLogManager::GetLogger("myLogger");

//Build and log message with message value, user ID and DEVELOPER audience

logger.log(log4cplus::ERROR_LOG_LEVEL,

::elt::log::CiiLogMessageBuilder::Create().WithMessage("Message value")

.WithUserLogId("user_log_id")

.WithAudience(::elt::log::CiiLogAudience::DEVELOPER)

.Build());

try {

CII_THROW(::elt::error::CiiException, "TEST EXCEPTION");

} catch (const elt::error::CiiException& e) {

//Build and log message with formatted CiiException using builder

//pattern

logger.log(log4cplus::ERROR_LOG_LEVEL,

::elt::log::CiiLogMessageBuilder::Create()

.WithMessage("This is and exception")

.WithException(e)

.Build());

//Build and log message with formatted CiiException using the

//convenience method

logger.log(log4cplus::ERROR_LOG_LEVEL,

::elt::log::CiiLogMessageBuilder::CreateAndBuildWithException(

"This is an exception", e));

}

//Build and log message with DEVELOPER audience

logger.log(log4cplus::ERROR_LOG_LEVEL,

::elt::log::CiiLogMessageBuilder::CreateAndBuildWithAudience(

"Message for DEVELOPER audience",

::elt::log::CiiLogAudience::DEVELOPER));

//Build and log a message with tracing context information

logger.log(log4cplus::ERROR_LOG_LEVEL,

::elt::log::CiiLogMessageBuilder::Create(

"This is a log message with tracing info")

.WithTracingInfo(true).Build());

return 0;

} catch (const ::elt::error::CiiException& ex) {

std::cerr << "CiiException occurred while executing sample code. What: "

<< ex.what() << '\n';

}

return -1;

}

The output of the code in Listing 4‑15 using the CiiSimpleLayout would be the following:

Listing 4‑16 Output CiiLogMessageBuilder usage example C++

2020-04-19T21:56:56.130+0200, ERROR, myLogger/140218499314368, Message value, Audience=DEVELOPER, UserLogID=user_log_id

2020-04-19T21:56:56.303, ERROR, myLogger/140218499314368, This is and exception, ErrorTypeName=elt::error::CiiException, ErrorDateTime=1587333416, ErrorMessage=TEST EXCEPTION, ErrorTrace= 0# elt::error::CiiException::CiiException(std::string const&) at ../elt-common/cpp/error/client/src/ciiException.cpp:29

1# main at ../cpp/logging-messagebuilder-app/src/logging-messagebuilder-app.cpp:25

2# __libc_start_main in /lib64/libc.so.6

3# 0x0000000000414039 in cii-logging-example-messagebuilder-app

2020-04-19T21:56:56.303+0200, ERROR, myLogger/140218499314368, This is an exception, ErrorTypeName=elt::error::CiiException, ErrorDateTime=1587333416, ErrorMessage=TEST EXCEPTION, ErrorTrace= 0# elt::error::CiiException::CiiException(std::string const&) at ../elt-common/cpp/error/client/src/ciiException.cpp:29

1# main at ../cpp/logging-messagebuilder-app/src/logging-messagebuilder-app.cpp:25

2# __libc_start_main in /lib64/libc.so.6

3# 0x0000000000414039 in cii-logging-example-messagebuilder-app

2020-04-19T21:56:56.303+0200, ERROR, myLogger/140218499314368, Message for DEVELOPER audience, Audience=DEVELOPER

2020-04-19 21:56:56.303, ERROR, myLogger/140218499314368, This is a log message with tracing info

9.4.7.3. Python¶

This example can be run with the $CIISRV_ROOT/bin/cii-logging-example-messagebuilder-py executable file installed by the logging-examples module.

Listing 4‑17 Adding optional info to logs (Python)

def main():

"""

Main application code

@return status code, int

"""

result = 0

try:

logger = CiiLogManager.get_logger("myLogger")

# Build and log message with message value, user ID and DEVELOPER

# audience

logger.error(CiiLogMessageBuilder()

.with_message("Message value")

.with_user_log_id("user_log_id")

.with_audience(CiiLogAudience.DEVELOPER)

.build())

try:

raise CiiException("TEST EXCEPTION")

except CiiException as e:

# Build and log message with formatted exception using builder

# pattern

logger.error(CiiLogMessageBuilder.create()

.with_message("This is an exception")

.with_exception(e)

.build())

# Build and log message with formatted exception using convenience

# method

logger.error(CiiLogMessageBuilder

.create_and_build_with_exception("This is an excaption", e))

# Build and log message with DEVELOPER audience

logger.error(CiiLogMessageBuilder

.create_and_build_with_audience("Message for DEVELOPER audience", CiiLogAudience.DEVELOPER))

# Build and log a message with tracing context information

logger.error(CiiLogMessageBuilder

.create("This is a log message with tracing info")

.with_tracing_info(True)

.build())

# Build and log a message with keyword args

logger.error(CiiLogMessageBuilder

.create_and_build_with_args(message='This is a message',

user_log_id='USER_LOG_ID',

audience=CiiLogAudience.DEVELOPER))

except Exception as e:

print('Exception occurred while executing sample code: %s' % e)

result = 5

return result

if __name__ == '__main__':

sys.exit(main())

The output of the code in Listing 4‑17 using the CiiSimpleLayout would be the following:

Listing 4‑18 Output CiiLogMessageBuilder usage example Python

2020-04-19T21:56:56.130+0200, ERROR, cii-logging-example-messagebuilder-app-py/MainThread, Message value, Audience=DEVELOPER, UserLogID=user_log_id

2020-04-19T21:56:56.303+0200, ERROR, cii-logging-example-messagebuilder-app-py/MainThread, This is an exception, ErrorTypeName=elt.error.Exceptions.CiiException, ErrorDateTime=1588727172250, ErrorMessage=TEST EXCEPTION, ErrorTrace=Traceback (most recent call last):

File "/home/rfernandez/INTROOT/bin/cii-logging-example-messagebuilder-app-py", line 30, in main

raise CiiException("TEST EXCEPTION")

elt.error.Exceptions.CiiException: TEST EXCEPTION

2020-04-19T21:56:56.303+0200, cii-logging-example-messagebuilder-app-py/MainThread, This is an excaption, ErrorTypeName=elt.error.Exceptions.CiiException, ErrorDateTime=1588727172250, ErrorMessage=TEST EXCEPTION, ErrorTrace=Traceback (most recent call last):

File "/home/rfernandez/INTROOT/bin/cii-logging-example-messagebuilder-app-py", line 30, in main

raise CiiException("TEST EXCEPTION")

elt.error.Exceptions.CiiException: TEST EXCEPTION

2020-04-19T21:56:56.303+0200, ERROR, cii-logging-example-messagebuilder-app-py/MainThread, Message for DEVELOPER audience, Audience=DEVELOPER

2020-04-19T21:56:56.303+0200, ERROR, cii-logging-example-messagebuilder-app-py/MainThread, This is a log message with tracing info

2020-04-19T21:56:56.303+0200, ERROR, cii-logging-example-messagebuilder-app-py/MainThread, This is a message, Audience=DEVELOPER, UserLogID=USER_LOG_ID

9.4.8. String formatting¶

Usage of formatted strings in the printf style is supported in Java, C++, and Python.

In Java, the String.format method can be used to construct the required message. The Logger instances returned by CiiLogManager.getLogger also support string formatting.

The following examples would produce a log with the message “Number: 100”.

logger.debug(String.format("Number: %d", 100));

logger.debug("Number: %d", 100);

In C++ it is supported through the LOG4CPLUS_<LEVEL>_FMT macros [20], as shown in the following example:

LOG4CPLUS_WARN_FMT(root, "Number: %d", 100);

In Python it is also supported using the language’s regular string formatting constructs.

logger.error("Number: %d", 100)

logger.error("Number: %d" % 100)
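The two Python calls above produce the same text, but they differ in when the formatting happens. The following minimal sketch, which uses only the standard logging module, illustrates the difference. The Tracked helper class, the logger name formatting.example, and the in-memory stream are hypothetical and exist only for this illustration.

```python
import io
import logging


class Tracked:
    """Wraps a value and counts how often it is converted to a string."""

    def __init__(self, value):
        self.value = value
        self.str_calls = 0

    def __str__(self):
        self.str_calls += 1
        return str(self.value)


stream = io.StringIO()
logger = logging.getLogger("formatting.example")  # hypothetical logger name
logger.addHandler(logging.StreamHandler(stream))  # default format: message only
logger.propagate = False
logger.setLevel(logging.ERROR)

lazy = Tracked(100)
eager = Tracked(100)

# DEBUG is below the ERROR threshold, so neither call emits anything.
logger.debug("Number: %s", lazy)    # arguments kept separate: never formatted
logger.debug("Number: %s" % eager)  # formatted before the logger is called

# When the message is emitted, both styles produce the same text.
logger.error("Number: %d", 100)
logger.error("Number: %d" % 100)

lines = stream.getvalue().splitlines()
print(lazy.str_calls, eager.str_calls)
print(lines)
```

Passing the arguments separately lets the logging module skip the formatting work for messages that are discarded, which is why that form is usually preferred.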

9.4.8.1. Compile-time validation¶

Compile-time validation of the formatted strings is supported for Java and for C++.

In the C++ case, compile time validation is automatically done if the LOG4CPLUS_<LEVEL>_FMT macros [20] are used.

In Java, this is supported through the Checker Framework [17]. This solution applies not only to formatted strings used in log messages, but to any formatted string in the source code.

The Format String Checker prevents the use of incorrect format strings in format methods such as System.out.printf, String.format, or the Logger log methods. It warns the developer if an invalid format string is written, and it warns if the other arguments are not consistent with the format string (in number of arguments or in their types).

The Checker Framework library is installed in the system as part of srv-support-libs when the client-apis module is installed. Once installed, it can be used during compilation as shown in the example below, which compiles the class TestUnitLogStringCheckerTest.java:

export CHECKERFRAMEWORK=$CIISRV_ROOT/lib/srv-support-libs/checker-framework-3.2

javac -J-Xbootclasspath/p:$CHECKERFRAMEWORK/javac.jar \
    -Xbootclasspath/p:$CHECKERFRAMEWORK/jdk8.jar \
    -cp $CHECKERFRAMEWORK/checker-qual.jar:$CIISRV_ROOT/lib/srv-support-libs/*:$CIISRV_ROOT/lib/ \
    -processorpath $CHECKERFRAMEWORK/checker.jar \
    -processor org.checkerframework.checker.formatter.FormatterChecker \
    -Astubs=$CHECKERFRAMEWORK/Logger.astub \
    -source 8 -target 8 TestUnitLogStringCheckerTest.java

In Python this is not supported, since Python code is not compiled ahead of time; an invalid format string is only detected at runtime.
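Since Python offers no compile-time check, one option is to exercise format strings in unit tests. The sketch below is a hypothetical helper, not part of the CII API: format_matches simply tries the printf-style substitution and reports whether it succeeds.

```python
def format_matches(template, *args):
    """Return True if the printf-style substitution succeeds, False otherwise."""
    try:
        template % args
    except (TypeError, ValueError):
        # TypeError: wrong argument type or wrong number of arguments.
        # ValueError: unsupported conversion character in the template.
        return False
    return True


print(format_matches("Number: %d", 100))        # valid template and argument
print(format_matches("Number: %d", "hundred"))  # wrong argument type
print(format_matches("Number: %d %d", 100))     # too few arguments
print(format_matches("Number: %d", 100, 200))   # too many arguments
```

A check of this kind only covers the templates and argument types that the tests actually exercise, so it is weaker than the compile-time validation available for Java and C++.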

9.4.9. Using the Postmortem Buffer¶

This section describes the usage of the postmortem buffer. By default, this buffer is disabled and it is the responsibility of the user to enable it.

The postmortem buffer is configured through two environment variables:

CII_LOG_BUFFER_ON: If set to true, the postmortem buffer will be enabled. If not set, or set to any other value, the postmortem buffer will be disabled.

CII_LOG_BUFFER_TTL_MS: Time-To-Live in milliseconds of the buffered log events.

Changes to these environment variables after logging has been initialized have no effect on the current configuration. For a change to be applied, logging must be shut down and initialized again.

Programmatic and file-based configurations of the postmortem buffer take precedence over these environment variables.
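The rules above can be sketched as a small lookup function. This is an illustration only, not the CII implementation: the function name read_postmortem_config and the overrides parameter are hypothetical, the comparison with true is assumed to be case-sensitive, and the fallback TTL of 60000 ms is an assumption borrowed from the default documented for the Java system property.

```python
import os

DEFAULT_TTL_MS = 60000  # assumption: same default as the Java property


def read_postmortem_config(environ=None, overrides=None):
    """Resolve (enabled, ttl_ms) from the environment and optional overrides.

    overrides stands in for programmatic or file-based settings, which
    take precedence over the environment variables.
    """
    environ = os.environ if environ is None else environ
    overrides = overrides or {}

    # Only the exact value "true" enables the buffer; anything else disables it.
    enabled = environ.get("CII_LOG_BUFFER_ON") == "true"

    # int() raises ValueError for a non-numeric TTL; a real implementation
    # may prefer to fall back to the default instead.
    ttl_raw = environ.get("CII_LOG_BUFFER_TTL_MS")
    ttl_ms = int(ttl_raw) if ttl_raw is not None else DEFAULT_TTL_MS

    enabled = overrides.get("enabled", enabled)
    ttl_ms = overrides.get("ttl_ms", ttl_ms)
    return enabled, ttl_ms


env = {"CII_LOG_BUFFER_ON": "true", "CII_LOG_BUFFER_TTL_MS": "30000"}
print(read_postmortem_config(env))
print(read_postmortem_config(env, overrides={"enabled": False}))
```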

Due to the differences in the implementations and APIs of the logging frameworks that the CII Log Client API builds on, the implementation and usage of the postmortem buffer vary from language to language. Configuration and usage instructions are described for each of the three languages in the following subsections.

Note: Enabling the postmortem buffer can have a significant performance impact, which grows with the number of logs produced. When it is enabled, every logging event, regardless of its level, gathers the full log context information (hostname, line number, file name, etc.). Gathering this information takes significant time when many logs are produced.

9.4.9.1. Java¶

In addition to the configuration of the postmortem buffer through environment variables, it is possible to configure it using system properties.

cii.log.buffer.on: This property defines if the buffer is enabled or not. By default, it is disabled. Must be set to true to enable the buffer.

cii.log.buffer.ttl.ms: This property defines the TTL value in milliseconds for entries in the buffer. Default is 60000 ms (1 min).

These properties must be set before initialization of the Log4j framework in order for them to have effect.

If either of these system properties is defined, it overrides the environment variable for that configuration parameter.

Once the logging framework is initialized, it is not possible to enable or disable the postmortem buffer.

If the postmortem buffer is enabled, the active configuration can no longer be changed through the Log4j API once it has been initialized. The Logger.getLevel() method, or any other method that retrieves the level or filter configuration at runtime, can return an incorrect value and should not be relied upon.

The example in Listing 4‑19 describes a standard workflow using the postmortem buffer.

First, the cii.log.buffer.on property is set in order to enable the postmortem buffer. The cii.log.buffer.ttl.ms property is also set to a custom value of 30 seconds, which becomes the TTL of the events in the buffer.

Then, a logger object is obtained with the name of the current class. Since no custom configuration has been defined, it will automatically initialize the logging framework to the default CII configuration. Default configuration only has the root logger configured; therefore, the retrieved object will use the root logger configuration, which filters out logs from levels below ERROR.

Next, a log message is produced for every log level, from TRACE to FATAL. Only logs of levels ERROR and FATAL will be logged by the appenders.

Finally, the CiiLogManager.flushPostmortemBuffer() method is called. This outputs all the buffered logs to all the appenders that originally rejected them, whether they were filtered out by the logger or by the appender itself. Listing 4‑20 shows the console output of the execution of the example. As can be seen, the ERROR and FATAL messages are printed to the console first, and then, when the postmortem buffer is flushed, the rest of the log messages are printed.

The example shown in Listing 4‑19 can be run from the root directory of the logging-examples module with the provided run-logging-example-postmortem-buffer-java script.

Listing 4‑19 Example postmortem appender (Java)

package elt.log.examples;

import elt.log.CiiLogManager;
import org.apache.logging.log4j.Level;
import org.apache.logging.log4j.Logger;

public class PostmortemAppenderExample {
    public static void main(String[] args) {
        // Enable the postmortem appender
        // This must be done before initializing Log4j
        System.setProperty("cii.log.buffer.on", "true");

        // Set the time-to-live of the log events in the buffer to 30s
        System.setProperty("cii.log.buffer.ttl.ms", "30000");

        // Automatically initialize the logging framework with console and
        // file appender and get the logger with the name
        // elt.log.examples.PostmortemAppenderExample
        Logger logger = CiiLogManager.getLogger(
                PostmortemAppenderExample.class.getCanonicalName());

        // Create a log message of each level. Only messages of levels ERROR
        // and FATAL will be output to the console and file
        logger.log(Level.TRACE, "Trace message");
        logger.log(Level.DEBUG, "Debug message");
        logger.log(Level.INFO, "Info message");
        logger.log(Level.WARN, "Warn message");
        logger.log(Level.ERROR, "Error message");
        logger.log(Level.FATAL, "Fatal message");

        try {
            Thread.sleep(500);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }

        // Flush the postmortem appender. Logs of levels TRACE, DEBUG, INFO,
        // and WARN will be now output to the console and file
        CiiLogManager.flushPostmortemBuffer();
    }
}

This example produces the following output:

Listing 4‑20 Console output from postmortem example (Java)

2020-03-12T22:33:14.524+0100, ERROR, elt.log.examples.PostmortemAppenderExample/main, Error message

2020-03-12T22:33:14.524+0100, FATAL, elt.log.examples.PostmortemAppenderExample/main, Fatal message

2020-03-12T22:33:14.524+0100, TRACE, elt.log.examples.PostmortemAppenderExample/main, Trace message

2020-03-12T22:33:14.524+0100, DEBUG, elt.log.examples.PostmortemAppenderExample/main, Debug message

2020-03-12T22:33:14.524+0100, INFO, elt.log.examples.PostmortemAppenderExample/main, Info message

2020-03-12T22:33:14.524+0100, WARN, elt.log.examples.PostmortemAppenderExample/main, Warn message
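The behaviour seen in this output (accepted events first, rejected events only after the flush) can be modelled in a few lines. The sketch below uses the Python standard logging module to illustrate the idea. It is not the CII or Log4j implementation: the class names PostmortemBuffer and ListHandler and the method flush_postmortem are hypothetical, and the standard Python levels are used, so there is no TRACE level and FATAL appears as CRITICAL.

```python
import logging
import time


class ListHandler(logging.Handler):
    """Stands in for a console or file appender and records what it outputs."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(f"{record.levelname}: {record.getMessage()}")


class PostmortemBuffer(logging.Handler):
    """Forwards accepted records and keeps rejected ones until they expire."""

    def __init__(self, target, threshold=logging.ERROR, ttl_ms=60000):
        super().__init__(level=logging.NOTSET)
        self.target = target
        self.threshold = threshold
        self.ttl_s = ttl_ms / 1000.0
        self.buffer = []  # list of (arrival time, record)

    def _drop_expired(self):
        limit = time.monotonic() - self.ttl_s
        self.buffer = [(t, r) for t, r in self.buffer if t >= limit]

    def emit(self, record):
        if record.levelno >= self.threshold:
            self.target.handle(record)  # passes the filter: output immediately
        else:
            self._drop_expired()
            self.buffer.append((time.monotonic(), record))

    def flush_postmortem(self):
        """Output every buffered record that has not yet expired."""
        self.acquire()
        try:
            self._drop_expired()
            for _, record in self.buffer:
                self.target.handle(record)
            self.buffer = []
        finally:
            self.release()


sink = ListHandler()
postmortem = PostmortemBuffer(sink, ttl_ms=30000)
logger = logging.getLogger("postmortem-demo")  # hypothetical logger name
logger.setLevel(logging.DEBUG)
logger.propagate = False
logger.addHandler(postmortem)

for level in (logging.DEBUG, logging.INFO, logging.WARNING,
              logging.ERROR, logging.CRITICAL):
    logger.log(level, "%s message", logging.getLevelName(level).capitalize())

before_flush = list(sink.messages)  # only ERROR and CRITICAL so far
postmortem.flush_postmortem()       # the rejected records follow
print("\n".join(sink.messages))
```

As in the Java example, the order of the final output reflects the time of output, not the time at which each event was produced.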

9.4.9.1.1. Async Logging¶

Log4j supports asynchronous logging, as described in [11]. Note that when using custom configurations, the includeLocation property of the loggers’ configuration must be explicitly set to true. Otherwise, information such as the source code file and line references will be lost. This setting is also necessary for the postmortem buffer to work as intended, since the buffer relies on a log event’s context data, which would otherwise be lost.

Asynchronous logging also needs to be taken into consideration when flushing the buffer: some of the most recently produced log events might not yet have been processed by the buffer at the time of flushing.
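The general pattern of draining the asynchronous queue before acting on its contents can be illustrated with the Python standard library, whose QueueHandler and QueueListener classes provide a comparable asynchronous pipeline. This is an analogy only, under the assumption that the same ordering concern applies; it does not show Log4j behaviour, and the ListHandler class and logger name are hypothetical.

```python
import logging
import logging.handlers
import queue


class ListHandler(logging.Handler):
    """Records the messages that actually reached the end of the pipeline."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(record.getMessage())


sink = ListHandler()
log_queue = queue.Queue()

# The listener handles queued records on a background thread.
listener = logging.handlers.QueueListener(log_queue, sink)
listener.start()

logger = logging.getLogger("async-demo")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(logging.handlers.QueueHandler(log_queue))

for i in range(100):
    logger.info("event %d", i)

# At this point some events may still be waiting in the queue.
# stop() blocks until the background thread has handled everything
# that was queued before the call.
listener.stop()

print(len(sink.messages))
```

Only after the queue has been drained is it safe to assume that every produced event has reached its destination.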

9.4.9.2. C++¶

In C++, the postmortem buffer can be configured using the environment variables mentioned earlier, as well as programmatically. The CiiLogConfigurator class provides methods to initialize a configuration with the postmortem buffer enabled, as well as a method to enable the postmortem buffer once the configuration has already been initialized.

When the postmortem buffer is flushed, log messages indicating the START and END of the flushed logs are injected, as can be seen in Listing 4‑22, helping the user identify the logs that were buffered by the postmortem facility.

More details on how to configure the postmortem buffer can be found in Appendix A.2.

A usage example of the postmortem buffer in C++ is shown in Listing 4‑21. In this example, the postmortem buffer is enabled and a log TTL of 30 seconds is set. Then, logging is initialized with the default configuration. A series of log messages of different levels are produced, and finally, the postmortem buffer is flushed to all the configured appenders.

This example can be run with the $CIISRV_ROOT/bin/logging-example-postmortem-buffer-app executable file installed by the logging-examples module.

Listing 4‑21 Example postmortem appender (C++)

int main(int ac, char *av[]) {

try {

//Enable postmortem appender and set the time-to-live of the log events in

//the buffer to 30s

std::string postmortem_enable_env = std::string(

::elt::log::CiiLogManager::POSTMORTEM_APPENDER_ENABLED_ENV_VAR) +

"=true";

putenv(const_cast<char*>(postmortem_enable_env.c_str()));

std::string postmortem_ttl_env = std::string(

::elt::log::CiiLogManager::POSTMORTEM_APPENDER_ENTRYTTL_MS_ENV_VAR) +

"=30000";

putenv(const_cast<char*>(postmortem_ttl_env.c_str()));

// Automatically initialize the logging framework with console and file

// appender and get the logger with the name

// PostmortemAppenderExample

log4cplus::Logger logger = ::elt::log::CiiLogManager::GetLogger(

"PostmortemAppenderExample");

// Create a log message of each level

// Only messages of levels ERROR and FATAL will be output to the console

// and file

logger.log(log4cplus::TRACE_LOG_LEVEL, "Trace message");

logger.log(log4cplus::DEBUG_LOG_LEVEL, "Debug message");

logger.log(log4cplus::INFO_LOG_LEVEL, "Info message");

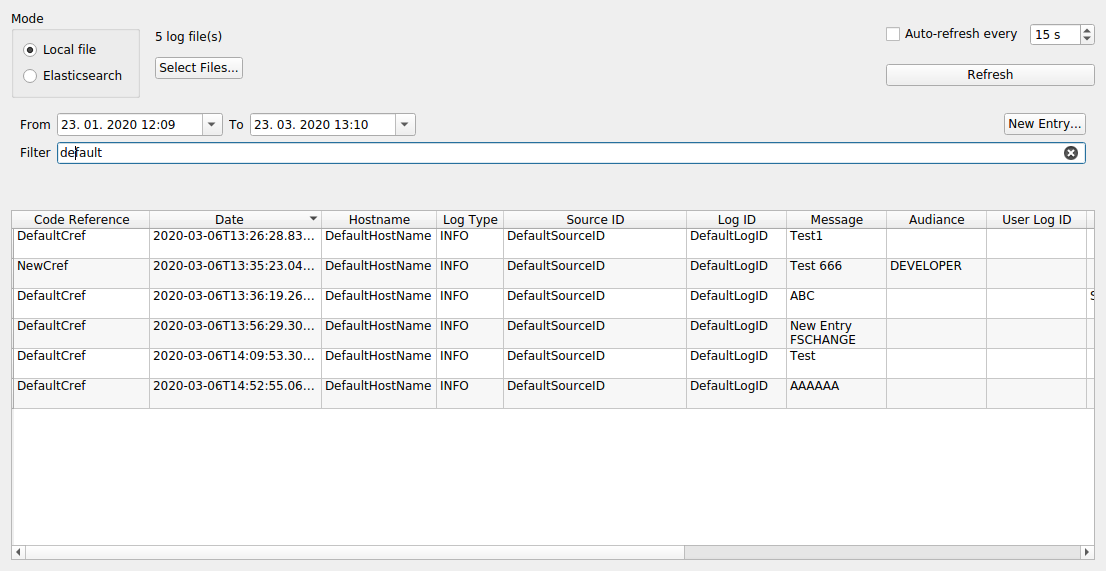

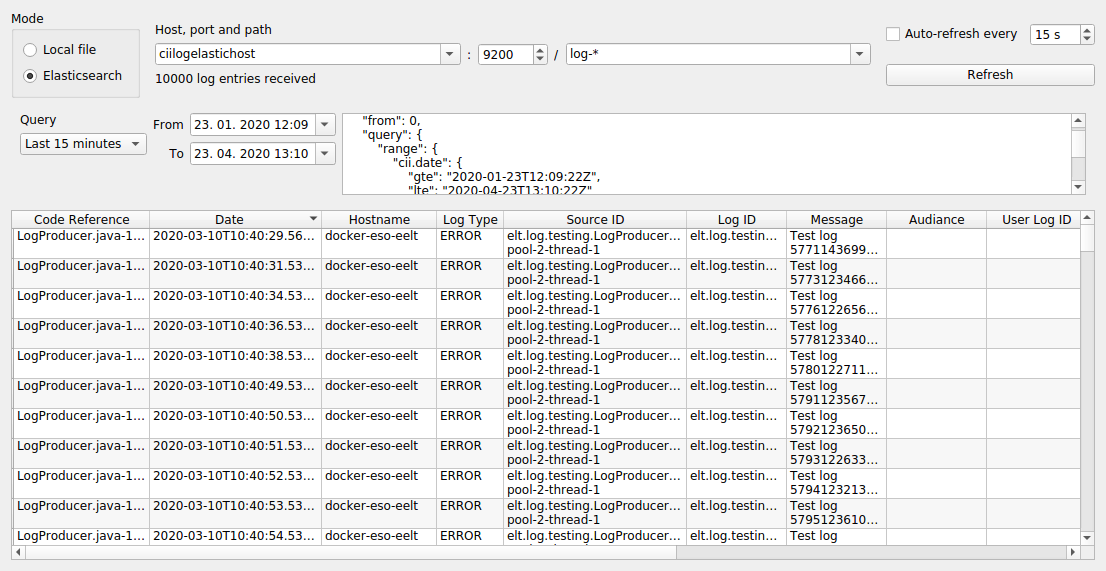

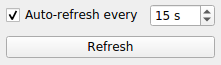

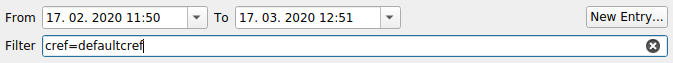

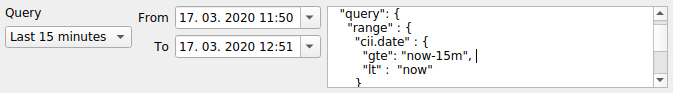

logger.log(log4cplus::WARN_LOG_LEVEL, "Warn message");